Welcome to Binary Circuit’s 27th weekly edition

Your weekly guide to the most important developments in the technology world

Dear Readers,

Binary Circuit investigates trends, technology, and how organizations can profit from rapid innovation. GreenLight, the brain behind Binary Circuit, identifies opportunities, analyzes challenges, and develops plans that help firms adapt, grow, and stay ahead. Looking to scale your business, find partners, or integrate AI and technology?

This week, we discuss why the AI era will be defined by human choices rather than by code, how AI is advancing cancer care through early intervention, and how a new AI race is emerging that centers on efficiency and environmental responsibility rather than scale.

The Era of AI Will Be Shaped Not by Code, but by the Decisions We Make

As AI rapidly spreads through global economies, the 2025 Human Development Report frames it not as a technological event but as a policy- and values-driven inflection point.

Global HDI (Human Development Index) reached its highest level ever in 2024, yet its rate of progress was the slowest in 35 years. At the same time, the gap between very high and low HDI countries is widening. The reason is not a failure of innovation, but a failure to align innovation with inclusive AI growth.

Technology is advancing fast, but without the right systems in place, it deepens inequality. A global survey shows that around 20% of respondents already use AI, and nearly two-thirds expect to use it soon, especially in education, health, and work, across all HDI levels.

AI as an amplifier, not an equalizer

The report introduces the concept of a complementarity economy, in which AI augments, rather than replaces, human potential. In radiology, for instance, AI enhances diagnostics, yet human judgment remains irreplaceable. In call centers, AI tools accelerate training but don’t diminish the need for empathy or problem-solving. But this promise is not distributed equally.

Most AI development, in both model training and market alignment, is happening in and for very high HDI countries. Meanwhile, low HDI countries remain data-scarce, infrastructure-poor, and excluded from global AI governance frameworks.

This asymmetry is also seen within countries. Even in high-income economies, men are more likely than women to use generative AI in professional settings, despite similar educational credentials. And in these same contexts, youth mental well-being is deteriorating, in part due to hyper-digitization and overexposure.

Implications for investors and policymakers

Geoeconomic risk: AI concentration risks deepening digital inequality, leaving many nations dependent and excluded

Workforce imbalance: Without human-centered design, AI may fuel underemployment and social instability

Mental health strain: Rising digital stress among youth threatens future productivity, especially in aging economies

Policy reset: Innovation must be judged not just by speed or scale, but by its real impact on human development

The coming years offer two paths:

- A tech future governed by code, scale, and capital concentration; or

- A human-centered future defined by inclusivity, ethical design, and institutional adaptability

The real risk isn't AI, but weak moral and political decisions. The future of AI will mirror the choices we make.

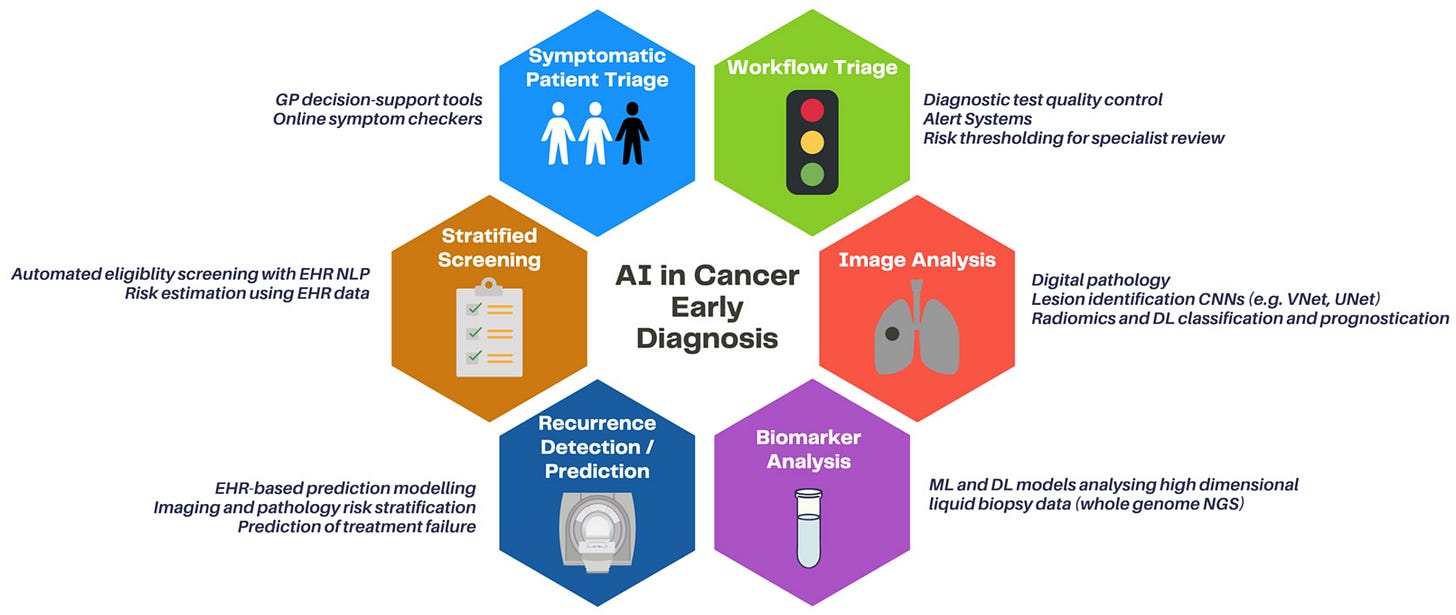

AI Is Transforming Cancer Care by Enabling Earlier Risk Detection and Preventive Action

For a long time, early cancer detection has been limited by human perception and by conventional risk modelling. Doctors rely on radiology tests and on statistical frameworks built on family history, age, or genetic predisposition. As a result, diagnoses are sometimes delayed or missed entirely.

FDA’s recent approval of Clairity Breast, the first AI tool to predict a woman’s five-year breast cancer risk from a routine mammogram, is more than a regulatory milestone. It’s a signal that AI is moving upstream—from diagnostic support to predictive guidance.

Unlike traditional models, Clairity’s algorithm detects subtle, high-dimensional patterns in imaging data. This shift marks the early stages of a strategic realignment in healthcare logic. Instead of responding to disease, systems are beginning to pre-position around probabilistic risk, driven by AI models that are trained on vast datasets and can detect risk trajectories early.

A few notable developments:

Dxcover’s AI-powered multiomic cancer test provides early detection across multiple cancers through a single blood sample

Garvan Institute’s AAnet model differentiates and characterises tumor cell subtypes in breast cancer, supporting multi-targeted treatment

City of Hope’s generative AI platform integrates across clinical decision layers—from trial matching to operational planning

Collectively, these tools demonstrate a trend: AI is not simply assisting clinicians—it’s building new layers of anticipatory intelligence into cancer detection.

Points to consider:

Risk is no longer one-size-fits-all; it’s personal, data-driven, and image-based

AI can scan thousands of images, spotting signs of disease before symptoms appear

If doctors can’t see what AI sees, explainability becomes non-negotiable

Predictive AI could spark action before diagnosis, blurring clinical lines

The New AI Race Is Not About Scale, but About Efficiency and Emissions

For years, the generative AI race has been driven by a single assumption: bigger is better. Larger models, more parameters, and billion-dollar training runs became the norm. But this approach is now being questioned, as it comes with high costs and growing environmental concerns.

Consider MiniMax-M1, a Chinese-developed open-source model that delivers performance on par with closed-source giants at a reported training cost of just $534,700. With a 1-million-token input context, state-of-the-art reasoning in mathematics and code generation, and a slim compute footprint, MiniMax-M1 has rewritten the cost-performance equation. By contrast, comparable models such as DeepSeek R1 were trained at a cost of $5.6 million, and GPT-4’s training cost reportedly exceeded $100 million. In effect, MiniMax is showing that architectural intelligence now rivals parameter scale as a competitive differentiator.

MiniMax-M1 consumes only 25% of the floating point operations (FLOPs) required by DeepSeek R1 at a generation length of 100,000 tokens.

MiniMax-M1 outperformed closed-source competitors in mathematical reasoning and code generation, scoring 86% on AIME 2024.
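To make the cost gap concrete, here is a minimal Python sketch that simply divides the figures quoted in this issue. It assumes those reported numbers are accurate and comparable; because GPT-4’s cost is only reported as exceeding $100 million, its multiple should be read as a floor. The dictionary and function names are illustrative and do not come from MiniMax, DeepSeek, or OpenAI.

```python
# Back-of-envelope comparison of the reported training costs quoted in this issue.
# Assumption: figures are taken as reported; GPT-4's value is only a lower bound.
REPORTED_TRAINING_COST_USD = {
    "MiniMax-M1": 534_700,
    "DeepSeek R1": 5_600_000,
    "GPT-4 (reported lower bound)": 100_000_000,
}

# MiniMax-M1 reportedly uses 25% of DeepSeek R1's FLOPs at 100,000 generated tokens.
FLOPS_SHARE_VS_DEEPSEEK_R1 = 0.25


def cost_multiple(model: str, baseline: str = "MiniMax-M1") -> float:
    """Return how many times more a model cost to train than the baseline model."""
    return REPORTED_TRAINING_COST_USD[model] / REPORTED_TRAINING_COST_USD[baseline]


if __name__ == "__main__":
    for name in REPORTED_TRAINING_COST_USD:
        print(f"{name}: {cost_multiple(name):.1f}x MiniMax-M1's cost")
    # A 25% FLOPs share means DeepSeek R1 needs 1 / 0.25 = 4x the compute.
    print(f"DeepSeek R1 compute at 100k tokens: {1 / FLOPS_SHARE_VS_DEEPSEEK_R1:.0f}x MiniMax-M1")
```

Run as is, it shows DeepSeek R1’s reported cost at roughly 10.5 times MiniMax-M1’s and GPT-4’s at 187 times or more, and the 25% FLOPs figure works out to DeepSeek R1 needing about 4 times the compute at 100,000 generated tokens.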

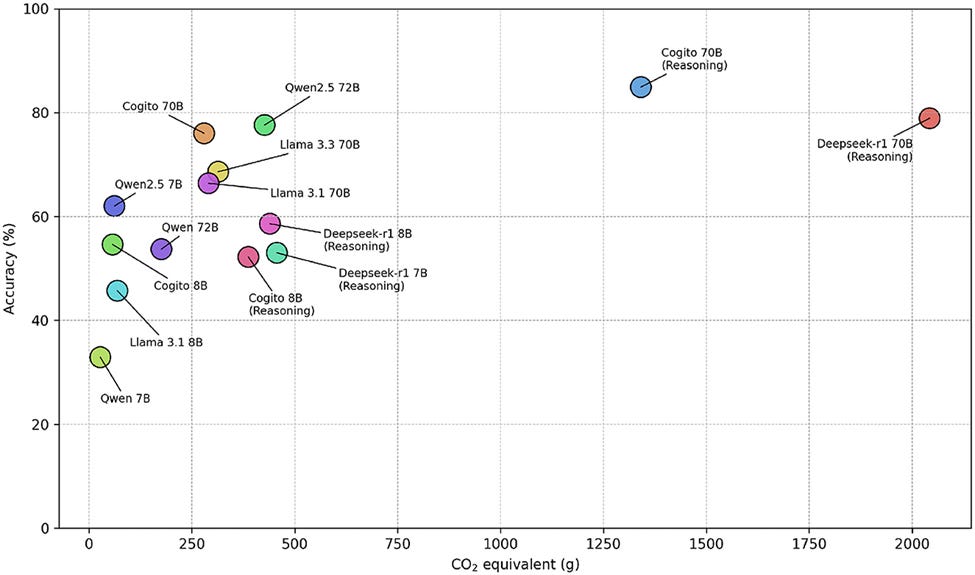

Another dimension of this shift is environmental cost. A benchmark study that evaluated models on 1,000 questions reveals the steep energy and emissions impact of reasoning-focused large language models. For example, DeepSeek-R1 70B delivered 78.9% accuracy yet emitted 2,042.4 g CO₂eq per run, a 74x increase compared to smaller models like Qwen 7B. Even high-performing reasoning-enhanced models such as Cogito 70B, which achieved 84.9% accuracy, generated large token volumes and emissions, pointing to a growing tension between intelligence and sustainability.

Combined CO₂eq emissions (in grams) and overall accuracy of each LLM across 1,000 questions. The results illustrate trade-offs between model size, reasoning depth, and environmental impact.

As AI systems are deployed at scale in education, healthcare, legal reasoning, and scientific discovery, token generation and response verbosity become environmental liabilities. Cogito 8B generated over 37,000 tokens on a single abstract algebra question. In contrast, Qwen 2.5 72B reached 77.6% accuracy on multiple-choice questions with an average of just 1.0 word per answer, consuming a fraction of the energy.

The AI race is no longer just a competition of capability—it is becoming a test of constraint. The winners will be those who can achieve high reasoning performance under tight compute and emissions budgets.

Chart of the week:

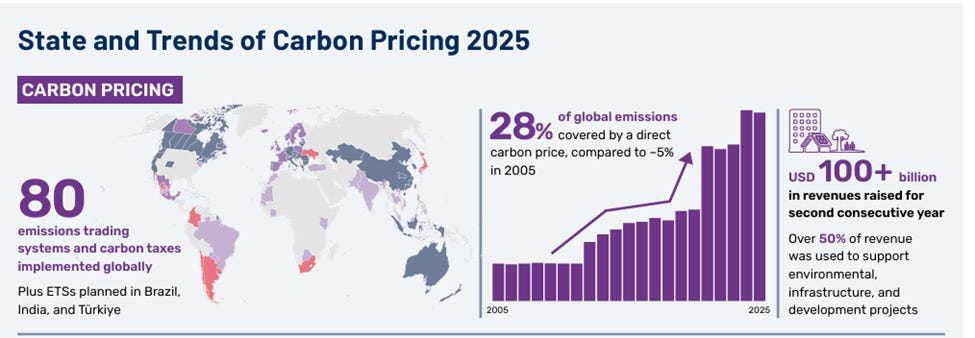

Carbon pricing crosses $100B—But is it enough to catch up with rising CO₂?

For thousands of years, Earth’s CO₂ levels stayed stable at ~280 ppm. Today, they’ve surged past 430 ppm, a 50%+ increase driven largely by fossil fuel combustion. The science is clear, and the urgency is real.
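The “50%+” figure follows directly from the two concentrations. Here is a minimal Python check, assuming the round values of 280 ppm and 430 ppm quoted above (actual measurements vary by year and season); the constant and function names are illustrative.

```python
# Percentage rise in atmospheric CO2 using the round figures quoted in this issue.
PREINDUSTRIAL_PPM = 280.0  # assumed long-run stable level (~280 ppm)
CURRENT_PPM = 430.0        # assumed present-day level (just past 430 ppm)


def percent_increase(old: float, new: float) -> float:
    """Relative change from old to new, expressed in percent."""
    return (new - old) / old * 100.0


if __name__ == "__main__":
    print(f"{percent_increase(PREINDUSTRIAL_PPM, CURRENT_PPM):.1f}% increase")
```

With these inputs the increase is about 53.6%, consistent with the “more than 50%” framing.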

In response, governments are finally putting a price on carbon.

According to the World Bank’s 2025 report, carbon pricing instruments such as taxes and emissions trading systems generated more than $100 billion in 2024. Their number has grown from just a handful in 2005 to 80 in operation globally today.

These mechanisms now cover 28% of global emissions, signaling growing momentum toward market-driven climate action. But coverage is still incomplete:

Transport and agriculture—major emission sources—remain largely unpriced. CO₂ levels are still climbing, and so is climate volatility.

The question isn’t whether carbon pricing works, but whether we’re scaling it fast enough and widely enough. Cutting energy waste, switching to renewables, and preserving forests are no longer optional; they’re essential to any credible climate strategy.

Sound Bite:

Novo Nordisk plans to launch its blockbuster drug Wegovy in India, aiming for ₹8,600 crore in sales over 5–7 years. With its “20-20” strategy focused on obesity and heart health, Novo is eyeing the top spot in India’s prescription drug market

Amazon will invest $233M+ in 2025 to expand its Indian logistics network. Expect faster fulfillment, improved infrastructure, and service coverage to every PIN code in the country

UAE invites AI to the cabinet table. The UAE’s National AI System will become an official Cabinet advisor in 2025, also joining the Ministerial Development Council and federal board meetings

Japan is building FugakuNEXT, a next-gen supercomputer projected to be 1,000× faster than existing systems. Powered by FUJITSU-MONAKA3 CPUs, it aims to lead in AI and scientific simulations