Welcome to Binary Circuit’s 22nd weekly edition

Your weekly guide to the most important developments in the technology world

Dear Readers,

Binary Circuit investigates trends, technology, and how organizations might profit from rapid innovation. GreenLight, the brain behind Binary Circuit, finds possibilities, analyzes challenges, and develops plans that help firms adapt, grow, and stay ahead. Are you looking to scale your business, partnerships, or AI/technology integration?

This week, we discuss how AI agents are becoming common, with DevOps as an early example; how AI is advancing radiology in emerging countries; and how quantum computing is reaching a critical stage.

Let’s dive in.

AI agents for specialized tasks are poised to become common. DevOps is arguably the first example

In the race to dominate AI, major tech companies appear to be developing similar multimodal models, trained on comparable data with the same objectives for various tasks, including chat, coding, image generation, and reasoning. As a result, these models can perform many functions with a certain degree of competence. Still, they may not possess the intelligence to handle specific tasks at an expert human level. This convergence limits innovation and raises systemic risk. AI development will inevitably shift from being proficient at general tasks to excelling in specialized functions.

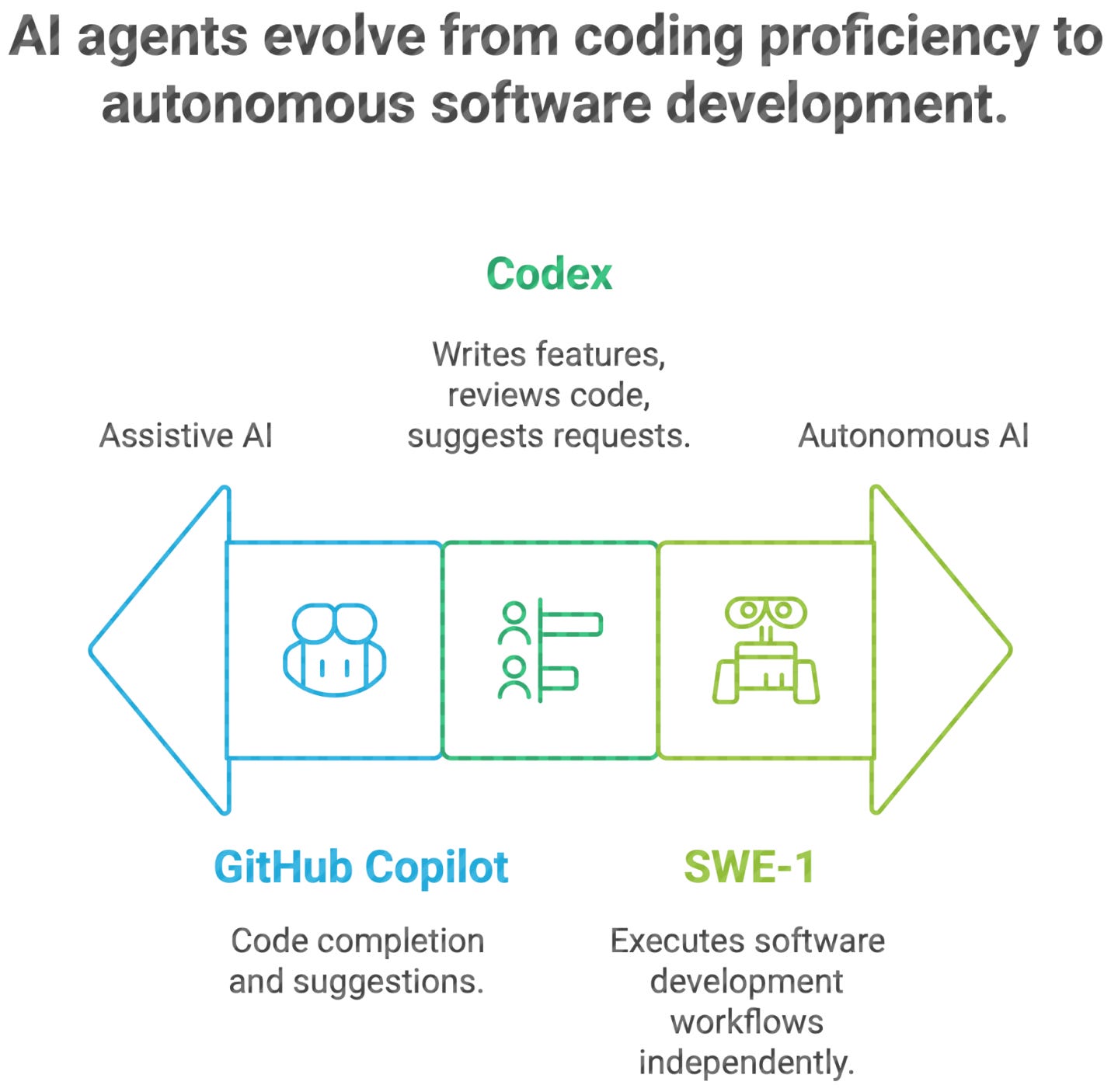

Software development might be the first area where AI agents remove the need for human intervention across a range of tasks. Code completion tools like GitHub Copilot have evolved into sophisticated AI systems managing full-cycle engineering tasks. Two new entrants, Codex and SWE-1, signal a shift from assistive AI to autonomous agents for the entire software development lifecycle.

SWE-1, a family of frontier-class AI models, is designed for software engineering. Unlike general-purpose language models fine-tuned for coding, SWE-1 was built to understand and execute software development workflows. It addresses tasks like architecture planning, dependency management, bug triaging, and long-running tasks in enterprise environments.

Codex, currently in research preview, is OpenAI’s latest cloud-based engineering agent, powered by codex-1, a specialized version of its o3 model. Codex is designed to operate like an autonomous teammate. Like human teams in software development, it writes new features, reviews existing code, suggests pull requests, and iterates on test cycles until the tests pass.

SWE-1 and Codex are not limited to proficiency in coding. They also excel at understanding context, workflows, and project goals. They generate code, manage tasks, understand version histories, and align with best practices, redefining DevOps. AI systems are becoming full-stack contributors.

DevOps teams and engineering leaders must now adapt to accommodate intelligent, parallel-working agents.

Companies should pay attention:

Invest in domain-specific models.

Experiment with alternative architectures.

Explore non-standard training data and fine-tuning regimes.

The future of AI doesn’t have to be a single, converged superintelligence. It can—and should—be a combination of complementary intelligences.

AI could have a profound impact on radiology, particularly in emerging countries with limited healthcare infrastructure

Radiologists all over the world are in short supply. The problem is more acute in emerging countries like India, where only 10,000 to 15,000 specialists serve a population of 1.4 billion. In the Western world, shifting healthcare from a reactive to a proactive model, which requires more diagnostic tests to prevent illness, is increasing the demand for radiologists. In this context, intelligent tools are essential, as they help reduce workload, expedite diagnosis, and enhance accuracy.
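To put the shortage in perspective, the figures above can be turned into a density estimate. The short Python sketch below uses only the numbers quoted in this section (10,000 to 15,000 specialists, 1.4 billion people); the variable and function names are our own, chosen for illustration. It works out to roughly 0.7 to 1.1 radiologists per 100,000 people, or one radiologist for every 93,000 to 140,000 people.

```python
# Figures quoted in the paragraph above; names are illustrative only.
POPULATION = 1_400_000_000
RADIOLOGISTS_LOW = 10_000
RADIOLOGISTS_HIGH = 15_000


def per_100k(specialists: int, population: int) -> float:
    """Return the number of specialists per 100,000 people."""
    return specialists / population * 100_000


density_low = per_100k(RADIOLOGISTS_LOW, POPULATION)
density_high = per_100k(RADIOLOGISTS_HIGH, POPULATION)

# People served by a single radiologist at each end of the range.
people_per_radiologist_worst = POPULATION / RADIOLOGISTS_LOW
people_per_radiologist_best = POPULATION / RADIOLOGISTS_HIGH

print(f"Radiologists per 100,000 people: {density_low:.2f} to {density_high:.2f}")
print(
    "People per radiologist: "
    f"{people_per_radiologist_best:,.0f} to {people_per_radiologist_worst:,.0f}"
)
```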

As of now, the U.S. FDA has cleared over 1,000 AI clinical applications, with radiology comprising over 70% of these approvals. Many tools target specific tasks like spotting lung nodules, identifying breast lumps, or highlighting scan anomalies. Their strength lies in pattern recognition, and they are most valuable when used alongside medical experts.

Google has introduced MedGemma, an open suite of models for multimodal medical text and image comprehension. Google’s previous solution, MedLM, a large language model fine-tuned for radiology, demonstrated near-expert performance in interpreting chest X-rays. Collab-CXR, a benchmarking dataset that involves 227 radiologists reviewing 324 real-world chest X-ray cases, is particularly helpful in detecting medical conditions from X-ray images. AI-augmented radiologists in the study made faster and more accurate diagnoses across more than 100 chest diseases, especially in high-volume, resource-constrained environments.

GE HealthCare's CleaRecon DL received FDA clearance and the CE mark in April 2025. It significantly reduces image artifacts in cone-beam CT scans, which are crucial for complex vascular and orthopedic operations.

Why does this matter, and what’s next?

AI is now proven to:

Close the knowledge gap in underprivileged areas.

Improve clinical confidence by reducing missed diagnoses.

Enhance system efficiency and patient outcomes by simplifying the process.

Success depends on validating AI tools in practical environments, ensuring openness, and leveraging multiple clinical data sources. The next frontier is responsibly scaling these innovative tools and building new ones.

The race for quantum advantage enters a critical development period

Recent months have seen strategic shifts, record investments, and technical milestones that underscore quantum computing's momentum.

Tech giants like IBM, Google, Microsoft, Amazon, and Intel, along with emerging players such as IonQ, Pasqal, and Quantinuum, are creating bold roadmaps.

Industry momentum is robust. Two recent developments are Google's Willow chip and Quantinuum's 12 logical qubits at 99.9% accuracy. IonQ is preparing rack-mounted systems for deployment in data centers. Pasqal and Quantinuum are also expanding for business uses in finance, climate science, and logistics.

Established players are making quick progress. By 2025, IBM aims to scale its Heron processor to more than 4,000 qubits, enabling the creation of a modular quantum supercomputer. By 2029, Google wants to build on its Sycamore and Willow chips to deliver a fault-tolerant quantum system. Intel is focusing on spin qubits compatible with current CMOS architecture, while Microsoft is investing in Majorana-based topological qubits.

NVIDIA is the latest contender. The GPU giant is planning to invest $700 million in PsiQuantum, a photonic quantum computing startup. Unlike other startups, PsiQuantum uses conventional semiconductor manufacturing methods, and it is partnering with the U.S. and Australian governments to build quantum computers in Chicago and Brisbane. NVIDIA is also launching a research lab in Boston with Harvard and MIT, highlighting the growing strategic importance of quantum technology.

Companies are taking different technological pathways:

Superconducting qubits (IBM, Google, Rigetti) advance error correction codes and modular architectures for thousands of interconnected qubits.

Photonic qubits (PsiQuantum) use semiconductor processes for scalability and integration.

Trapped ions (IonQ, Quantinuum, Oxford Ionics) and neutral atoms (Pasqal) push for higher-fidelity logical qubits and robust error correction, with Pasqal targeting 10,000 qubits.

Quantum annealing (D-Wave) provides practical value in logistics and optimization.

Spin qubits (Intel) ensure compatibility with CMOS infrastructure and progress toward mass production.

Financial services, logistics, and drug discovery are emerging as early beneficiaries. A quantum advantage is expected within five to ten years, although some companies already claim a real-world impact.

Chart of the week:

EV adoption is reshaping global oil demand.

Electric car sales are increasing rapidly. Nearly 58 million electric cars were on the road by 2024, and electric cars are projected to exceed 40% of global car sales by 2030.

The growing adoption of EVs is already clearly impacting oil demand. In 2024, EVs reduced oil use by more than 1.3 million barrels per day (mb/d), approximately equivalent to the amount of oil used by Japan's entire transportation sector. By 2030, EVs are expected to displace over 5 mb/d globally, with China alone accounting for half of that reduction, thanks to its large and growing fleet of EVs.

Most of the drop in oil use comes from electric light-duty vehicles (LDVs), like passenger cars. These made up 80% of the oil savings in 2024 and are expected to account for around 77% in 2030.
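For readers who want the breakdown in barrels, here is a minimal Python sketch that combines the figures quoted above. It treats "more than 1.3 mb/d" and "over 5 mb/d" as 1.3 and 5.0, so the results are lower-bound estimates, and the variable names are our own. Under those assumptions, light-duty vehicles account for about 1.04 mb/d of the 2024 savings and about 3.85 mb/d in 2030, China's share in 2030 is about 2.5 mb/d, and total displacement grows nearly fourfold.

```python
# Figures quoted in this section, in million barrels per day (mb/d).
# "More than 1.3" and "over 5" are treated as lower bounds (assumption).
DISPLACED_2024 = 1.3
DISPLACED_2030 = 5.0

LDV_SHARE_2024 = 0.80  # light-duty vehicle share of oil savings in 2024
LDV_SHARE_2030 = 0.77  # expected light-duty vehicle share in 2030
CHINA_SHARE_2030 = 0.50  # "half of that reduction"

ldv_2024 = DISPLACED_2024 * LDV_SHARE_2024
ldv_2030 = DISPLACED_2030 * LDV_SHARE_2030
china_2030 = DISPLACED_2030 * CHINA_SHARE_2030

# How many times larger the 2030 displacement is than the 2024 figure.
growth_factor = DISPLACED_2030 / DISPLACED_2024

print(f"LDV oil savings, 2024: {ldv_2024:.2f} mb/d")
print(f"LDV oil savings, 2030: {ldv_2030:.2f} mb/d")
print(f"China's share, 2030:   {china_2030:.2f} mb/d")
print(f"Growth 2024 to 2030:   {growth_factor:.1f}x")
```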