Welcome to Binary Circuit’s 20th weekly edition

Your weekly guide to the most important developments in the technological world

Dear Readers,

Binary Circuit investigates trends, technology, and how organizations might profit from rapid innovation. GreenLight, the brain behind Binary Circuit, finds possibilities, analyzes challenges, and develops plans that help firms adapt, grow, and stay ahead. Looking to scale your business, find partners, or integrate AI and technology?

This week, we discuss how AI is rapidly transforming science, transportation, and cybersecurity, and how global talent competition is taking center stage.

Let’s dive in.

What should we look forward to when AI steps into the role of a scientist?

With the integration of AI, science is becoming faster and more automated by the day. For example, this week, Anthropic launched an AI for Science program targeting scientific problems in biology and the life sciences.

AI adoption in healthcare is accelerating. Take Alibaba’s Damo PANDA, an AI tool that secured a “breakthrough device” designation for detecting pancreatic cancer.

It surpassed trained radiologists, with 34.1% higher sensitivity and 99.9% specificity, flagging only one false positive in 1,000 tests.

In quantum physics, Melvin, an AI system developed by Mario Krenn, doesn’t just perform experiments; it imagines them.

AI tools that were once used as support are now becoming core to scientific processes: suggesting hypotheses, designing experiments, predicting results, and even writing papers. Recently, an AI system named "The AI Scientist-v2" independently wrote a research paper that passed peer review at an ICLR workshop.

Gradually, the concept of an AI co-scientist is becoming prevalent in scientific research. Generative AI is helping researchers boost productivity. An MIT study reported that AI helped researchers discover 44% more materials and file 39% more patents.

But it also forces us to consider what kind of science we seek. Do researchers still feel real joy and a sense of creativity? Or is research becoming more mechanical, with scientists merely validating machine-generated ideas instead of crafting their own?

If AI begins to choose the questions and find the answers, science risks becoming less disruptive, driven by patterns rather than human insight.

Defining what truly matters may be the last area where human scientists still hold the edge.

Autonomous vehicles may soon be more than just experimental models

Autonomous vehicles have faced numerous challenges over the years, such as edge cases, sensor reliability, cybersecurity concerns, and regulatory limitations. However, new advancements and bold strategies are shifting the outlook of the entire industry.

Tesla has consistently been at the forefront of autonomous driving and is planning to launch its Cybercab before 2027, while Amazon’s self-driving startup, Zoox, aims to scale up production in 2026, accelerating the commercial robotaxi rollout across the U.S.

Additionally, Aurora has begun operating fully autonomous Class 8 tractor-trailers, completing over 1,200 miles. A few months ago, Uber and Lyft also integrated autonomous vehicle features into their apps.

Companies are now working hard to expand their services. Uber has launched robotaxi services with WeRide in Abu Dhabi and plans to expand to 15 cities, including some in Europe. In the U.S., its Waymo partnership is scaling from Austin to Atlanta, a clear sign of momentum in real-world deployment.

What’s driving the optimism around autonomous vehicles?

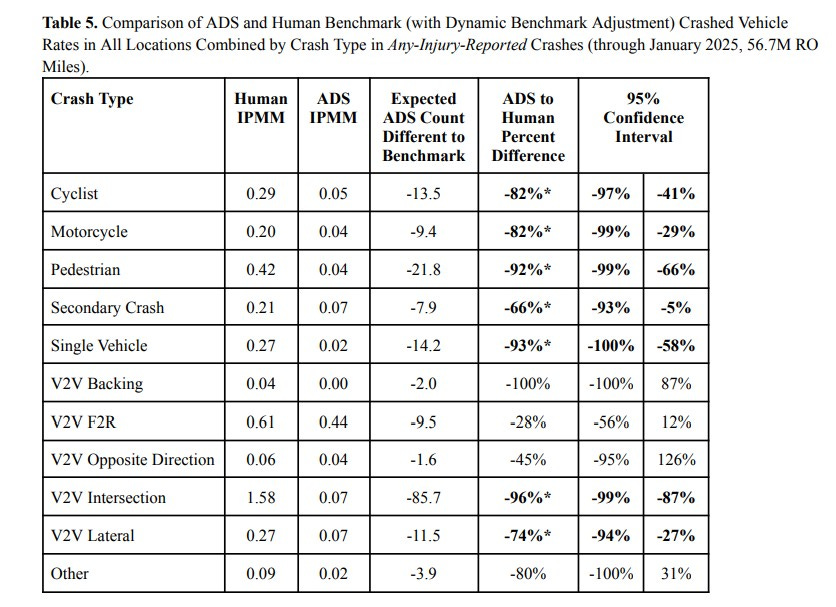

Safety concerns once held back self-driving cars. But now, new safety data is shifting the narrative. Waymo’s fully autonomous vehicles have logged 56.7 million miles across U.S. cities.

The results, compared with human drivers?

- 92% fewer pedestrian injuries

- 82% fewer cyclist injuries

- 96% fewer crashes at intersections

Data suggests autonomous cars may outperform human drivers in safety. The question is shifting from whether they will become common to when they will become pervasive.

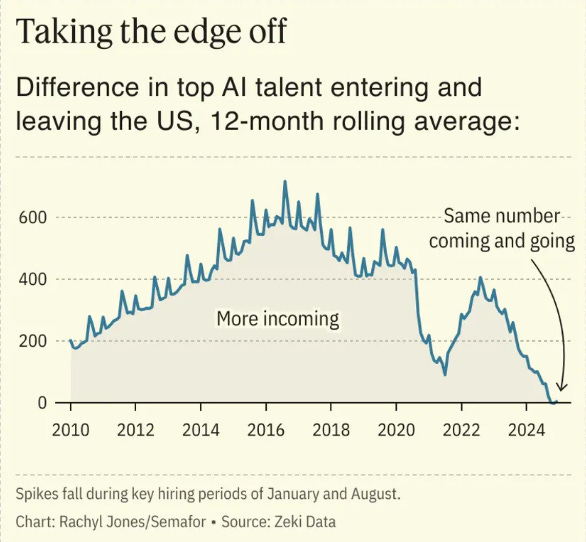

AI talent will dictate technological leadership amid fierce competition

In a geopolitical landscape driven by technological superiority, talent acquisition matters more than ever, alongside economic and military strength. The ability to attract, develop, and retain AI talent is now the ultimate strategic advantage, shaping the future of global leadership.

The U.S. has long attracted top tech talent, but this is changing. Overall U.S. job numbers remain strong, but the IT sector has recently shed more than 214,000 jobs, reflecting a deeper transformation in the tech workforce.

AI expertise is now more critical than traditional IT roles, and the race to control this talent is intensifying.

The future belongs to those who control AI talent. If the U.S. fails to rethink its strategy, it risks losing skilled professionals and its position as a technological leader in an AI-driven world.

China has established a formidable AI talent pipeline. Increasingly, researchers trained in the U.S. are returning to China, reinforcing its AI ecosystem. The U.S. can no longer count on keeping the best minds and now competes with nations investing heavily to retain homegrown talent.

Meanwhile, other players such as the UAE, Saudi Arabia, and India are also enhancing their AI industries, establishing themselves as serious contenders.

The U.S. must act fast to retain its competitive edge. Immigration policies must evolve, and domestic AI education must also be strengthened from the ground up. Investing in K-12 STEM programs and research infrastructure will be vital.

The erosion of the U.S. talent advantage could lead to an irreversible shift in the balance of power.

AI’s next frontier is to gain public trust

As AI becomes integral to daily life, people are asking important questions about its responsible development and use.

Public opinion is shifting towards AI that is transparent, accountable, and aligned with human values.

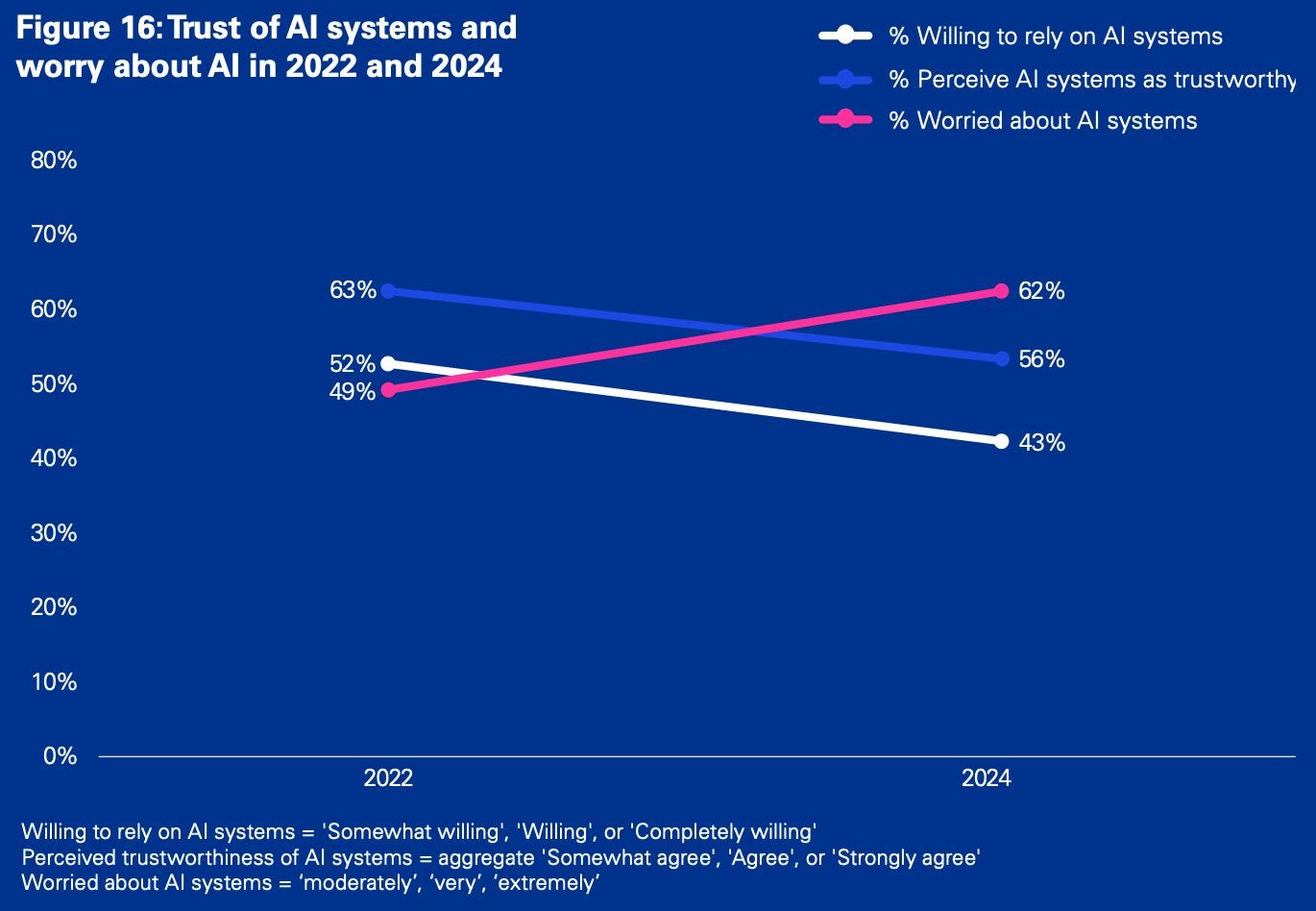

Society seeks proof of AI's benefits, not just admiration. A recent KPMG study across 17 countries shows trust in AI dropped from 63% in 2022 to 56% in 2024.

Concerns about AI are rising. In 2022, 49% of respondents were worried about it; by 2024, that share had grown to 62%. Brazil, the Netherlands, and Finland saw significant increases in concern. This indicates that AI is increasingly being judged on its effectiveness and purpose.

At the same time, reliance on AI tools has declined. Two years ago, 52% of people regularly used AI, but now that number is down to 43%. In Japan, usage has dropped from 43% to 21%, and in Brazil, from 67% to 53%.

This shift indicates increased awareness of AI's strengths and weaknesses. People are more informed, and they expect AI systems to earn their trust.

South Korea exemplifies how trust in AI can grow through thoughtful interaction. Thanks to domestic innovation and responsible policies, excitement about AI there increased from 57% to 75%.

This is a pivotal moment for businesses, developers, and governments. People see themselves as active participants with real expectations. AI's next stage depends not only on technical progress but also on meeting people's needs.

To build public confidence, companies must make AI easier to understand, involve communities in decisions, and create stronger safety measures.

Concern should be welcomed as a signal that society is ready to take AI seriously.

Open-source AI is changing cybersecurity. What must security leaders know?

Open-source large language models are transforming cybersecurity from a fragmented, vendor-driven market into a collaborative ecosystem, providing cost-effective, scalable, and specialized defenses against machine-scale threats.

The RSAC 2025 event showcased a strategic move toward open-source LLMs tailored for cybersecurity.

A few highlights:

Cisco’s Foundation-sec-8B model, built on Meta’s Llama 3.1 architecture, integrates vast curated datasets, including real-world incident summaries. Its performance stands out: it achieves high accuracy on benchmarks such as CTI-MCQA and CTI-RCM while requiring less hardware. Its open-weight design, licensed under Apache 2.0, allows enterprises to customize and extend it without vendor lock-in.

Meta advanced the open-source agenda with its AI Defenders Suite. It now includes:

- LlamaFirewall: a real-time AI security stack designed to detect prompt injections, monitor agent behavior, and inspect generated code.

- PromptGuard 2: updated with high-accuracy, lightweight models for detecting policy violations.

- CyberSec Eval 4: developed in collaboration with CrowdStrike, this suite evaluates AI under real-world SOC conditions and enables autonomous patching.

ProjectDiscovery was named “Most Innovative Startup” in the Innovation Sandbox at RSAC 2025 for its emphasis on open-source cybersecurity. Its main product, Nuclei, is a tool that rapidly identifies security weaknesses in APIs, websites, cloud systems, and networks.

What are the strategic implications for security leaders?

- Contribute to or adopt open-source cybersecurity tools.

- Use or fine-tune AI models trained specifically on cybersecurity datasets rather than general-purpose LLMs.

- Utilize frameworks to test model performance in SOC-like conditions before deployment.

- Deploy efficient models that run on modest hardware (e.g., 1–2 GPUs) without compromising performance.

- Apply AI to use cases such as code reviews, threat detection, incident response, and policy enforcement.

Cybersecurity leaders are shifting from competition to coordination by adopting open-source models and shared infrastructure.
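
For readers wondering why an 8B-class model can plausibly run on one or two GPUs, here is a rough back-of-the-envelope sketch. It is illustrative only: the 8 billion parameter count is inferred from the "8B" in the model name, while the 24 GB GPU size and the 20% overhead for activations and caches are assumptions of ours, not figures from Cisco or any vendor.

```python
import math

def weights_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Approximate memory needed just to hold the model weights, in GB."""
    return num_params * bytes_per_param / 1e9

def gpus_needed(memory_gb: float, gpu_memory_gb: float = 24.0,
                overhead: float = 1.2) -> int:
    """Estimate GPU count, padding weights by an assumed runtime overhead."""
    return math.ceil(memory_gb * overhead / gpu_memory_gb)

# Assumption: roughly 8 billion parameters, as the "8B" suffix suggests.
PARAMS_8B = 8e9

# Bytes per parameter for common numeric precisions.
PRECISIONS = {"fp32": 4, "fp16/bf16": 2, "int8": 1, "int4": 0.5}

for name, nbytes in PRECISIONS.items():
    mem = weights_memory_gb(PARAMS_8B, nbytes)
    print(f"{name:>9}: ~{mem:.0f} GB weights, ~{gpus_needed(mem)} GPU(s) at 24 GB each")
```

Under these assumptions, half-precision weights come to about 16 GB, which is why a single mid-range GPU can be enough, while full 32-bit precision would push the estimate to two. Real deployments vary with context length, batch size, and serving software, so treat the numbers as a starting point for capacity planning rather than a guarantee.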

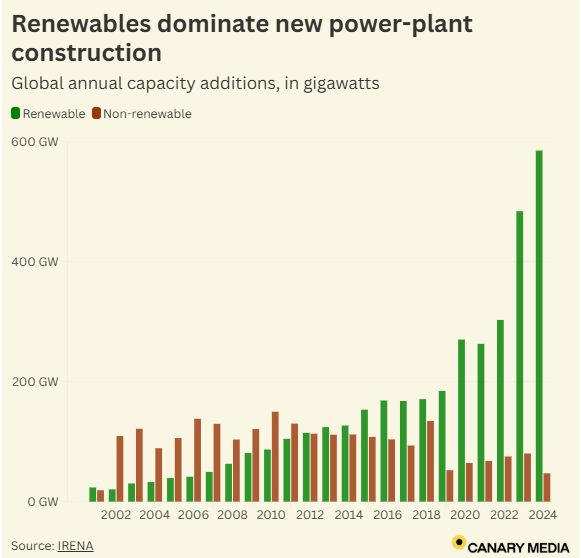

Chart of the week:

Clean energy was the leading new power generation source in 2024.

The International Renewable Energy Agency (IRENA) reports that over 90% of new power capacity in 2024 came from renewables. Driven by declining costs and ambitious decarbonization policies, clean energy has been expanding global power systems for years, with renewables consistently making up over half of the new generating capacity added to grids since 2012.

Sound bites you should know:

Apple and Anthropic partnered on AI-powered “vibe-coding” platforms. Xcode receives a generative AI upgrade through Claude Sonnet, designed to write, edit, and test code with minimal human input. What does the future of software coding look like?

ABB Robotics and BurgerBots launched an automated kitchen in California with collaborative robots like FlexPicker and YuMi, promising a meal in 27 seconds. Is this the tipping point for fast-food automation?

Huawei is developing the Ascend 910D chip to rival Nvidia’s H100 and is courting Chinese partners because of U.S. export restrictions. Can domestic innovation bridge the technological gap quickly?