Welcome to Binary Circuits’ 13th weekly edition

Your weekly guide to the most important developments in the technology world

Dear Readers,

This week in Binary Circuits, we discuss the key takeaways from NVIDIA’s annual GPU Technology Conference (GTC), which showcased new tools that will speed the shift toward accelerated computing and AI development and give AI a physical shape.

Let’s dive in.

NVIDIA’s GTC provides a glimpse of an AI-driven future. What are the new hardware and software updates, and why do they matter?

NVIDIA's annual GPU Technology Conference (GTC) is a hub for advancements in accelerated computing and AI. This year’s GTC, dubbed the "Super Bowl of AI," featured announcements from CEO Jensen Huang that are set to transform the industry. Huang described this moment as an "inflection point" in computing, and the keynote highlighted advances in AI performance, open-source tools, hardware, and strategic collaborations that are reshaping life and business. (watch the keynote here)

This blog post explores key takeaways and their implications for future AI development.

Huang noted that AI's capabilities have reached a turning point. Beyond recognizing images and generating text, AI is evolving into "agentic AI" that can reason and make decisions. As a result, demand for processing power has surged. Huang projected that data center capital spending will reach $1 trillion by 2028 as major cloud providers race to meet GPU demand.

New Hardware Takes Center Stage.

Better GPUs with more memory are arriving soon, enabling larger AI models and more complex workloads.

The current Blackwell AI platform is in full production, and Blackwell Ultra will arrive in late 2025. In its rack-scale GB300 NVL72 configuration, the Blackwell Ultra platform connects 36 Grace CPUs and 72 Blackwell Ultra GPUs. It features 5th-generation Tensor Cores and supports new low-precision number formats such as FP4, enabling over 1,000 TOPS of AI inference per chip.
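To see why a 4-bit format like FP4 speeds up inference, it helps to look at how few values it can represent. The sketch below assumes the E2M1 layout (1 sign bit, 2 exponent bits, 1 mantissa bit) described in the OCP Microscaling (MX) specification, whose magnitudes are 0, 0.5, 1, 1.5, 2, 3, 4 and 6, plus one shared scale factor per block of values. Production FP4 recipes differ in block size and scale encoding, so treat this as an illustration of the idea, not as NVIDIA's implementation.

```python
# Representable magnitudes of a 4-bit E2M1 float (sign handled separately).
FP4_E2M1_VALUES = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]
FP4_MAX = FP4_E2M1_VALUES[-1]


def quantize_block(values: list[float]) -> tuple[float, list[float]]:
    """Quantize a block of floats to FP4 with one shared scale factor.

    Returns the scale and the dequantized values so the rounding error
    can be inspected directly.
    """
    amax = max(abs(v) for v in values)
    # Map the largest magnitude in the block onto the largest FP4 value.
    scale = amax / FP4_MAX if amax > 0 else 1.0
    dequantized = []
    for v in values:
        scaled = abs(v) / scale
        # Round to the nearest representable FP4 magnitude.
        nearest = min(FP4_E2M1_VALUES, key=lambda q: abs(q - scaled))
        sign = -1.0 if v < 0 else 1.0
        dequantized.append(sign * nearest * scale)
    return scale, dequantized


if __name__ == "__main__":
    weights = [0.12, -0.5, 0.33, 0.9, -0.07, 0.61]
    scale, approx = quantize_block(weights)
    print(f"scale = {scale:.4f}")
    for w, a in zip(weights, approx):
        print(f"{w:+.3f} -> {a:+.3f}")
```

Each weight now needs only 4 bits plus a small per-block share of the scale, versus 16 bits in FP16, at the cost of the rounding error visible in the output.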

Huang also announced NVIDIA's next-generation Rubin chips. Named after astronomer Vera Rubin, they are expected to ship next year. The Rubin platform pairs a new NVIDIA CPU, Vera, with improved GPUs and has been almost entirely redesigned for speed and efficiency. The chips are expected to improve performance per dollar, lowering the cost of AI processing.

NVIDIA will follow with Rubin Ultra in 2027. According to NVIDIA, the Rubin Ultra NVL576 system could deliver 14 times the performance of today's top Blackwell systems. Achieving this involves packing many more GPUs into each server rack, which in turn requires more power and cooling.

NVIDIA aims to democratize AI with DGX Spark and DGX Station, which it calls "personal AI supercomputers." The compact DGX Spark, powered by the GB10 Grace Blackwell Superchip, offers up to 1 petaFLOP of AI compute for model fine-tuning and inference. The larger DGX Station, also built on the Grace Blackwell architecture, brings data-center-class AI capabilities to individual researchers and developers.

NVIDIA announced the RTX Pro Blackwell GPU series for creators and professional workstations. These cards handle demanding AI-driven workflows, 3D design, simulation, and advanced graphics tasks.

NVIDIA's Spectrum-X Photonics networking switches use co-packaged optics to move massive amounts of data inside AI factories, with the aim of scaling to millions of GPUs. The technology integrates optical pathways directly with electronic circuits, making it possible to link AI infrastructure across multiple sites while reducing energy consumption and costs.

According to NVIDIA, Spectrum-X Photonics delivers 3.5 times better power efficiency, 63 times better signal integrity, 10 times greater network resiliency, and 1.3 times faster deployment than conventional approaches. These gains address the scalability challenges of building AI infrastructure and keep data flowing smoothly among the processors needed to train complex AI models.

Storage also needs to be reinvented for AI. Huang pointed out that storage is shifting toward semantic retrieval systems that understand and deliver knowledge rather than merely raw data. Semantic retrieval makes data access more efficient and intelligent for AI workloads, where understanding the context and meaning of data is essential. NVIDIA is working with the entire storage industry to deliver GPU-accelerated storage stacks for future AI data requirements.
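Semantic retrieval typically works by turning both stored documents and a query into vectors and ranking documents by vector similarity, rather than by file path or exact lookup. The sketch below illustrates only that ranking step. The `embed` function is a hypothetical stand-in that uses simple word counts; real systems use learned embedding models whose vectors capture meaning beyond shared words. It does not represent NVIDIA's storage stack.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Hypothetical stand-in for an embedding model: a bag-of-words vector."""
    return Counter(text.lower().split())


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine of the angle between two sparse vectors (1.0 = same direction)."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query: str, documents: list[str], top_k: int = 2) -> list[str]:
    """Rank documents by similarity to the query and return the best matches."""
    q = embed(query)
    ranked = sorted(documents, key=lambda d: cosine_similarity(q, embed(d)), reverse=True)
    return ranked[:top_k]


if __name__ == "__main__":
    docs = [
        "quarterly revenue report for the data center business",
        "maintenance log for cooling pumps in hall b",
        "gpu cluster cooling and power usage summary",
    ]
    print(retrieve("cooling power for the gpu cluster", docs, top_k=1))
```

Swapping the toy `embed` for a learned model is what turns this from keyword overlap into retrieval by meaning; the ranking logic stays the same.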

Software and platforms shape the ecosystem of applied intelligence.

NVIDIA paired its hardware advances with a suite of new and improved software tools and platforms designed to accelerate AI development and deployment. Running AI models and managing their data requires ever more data centers, which NVIDIA calls "AI factories." Huang envisioned a future in which every company has two factories: one for the goods it makes and one for its AI. AI factories are specialized data centers that train models and deliver AI services.

One of the most significant announcements was NVIDIA Dynamo, new open-source inference software that NVIDIA calls the "operating system" for AI factories. Dynamo distributes large AI reasoning models across hundreds of GPUs and uses disaggregated serving, which runs the prompt-processing (prefill) and token-generation (decode) phases on separate GPUs, to boost throughput and reduce latency. NVIDIA claims that Dynamo is the "most efficient solution for scaling test-time compute" and that it can dramatically increase inference throughput while reducing response times and costs.
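Disaggregated (or split) serving rests on the observation that the two phases of LLM inference stress hardware differently: prefill processes the whole prompt at once and is compute-heavy, while decode produces one token at a time and is limited by memory bandwidth. The sketch below is a hypothetical, single-process simulation of that split, not Dynamo's API. A prefill worker builds a stand-in "KV cache" for each prompt and hands it off through a queue; a separate decode worker then generates tokens from it. In a real deployment the two pools run on different GPUs and the cache is transferred over a fast interconnect.

```python
from dataclasses import dataclass, field
from queue import Queue


@dataclass
class KVCache:
    """Hypothetical stand-in for the attention key/value cache built during prefill."""
    request_id: int
    tokens: list[str]


@dataclass
class Result:
    request_id: int
    output_tokens: list[str] = field(default_factory=list)


def prefill_worker(prompts: dict[int, str], handoff: Queue) -> None:
    """Prefill pool: process each whole prompt in one pass, then hand off its cache."""
    for request_id, prompt in prompts.items():
        handoff.put(KVCache(request_id, prompt.split()))


def decode_worker(handoff: Queue, max_new_tokens: int) -> list[Result]:
    """Decode pool: generate tokens one at a time from each handed-off cache."""
    results = []
    while not handoff.empty():
        cache = handoff.get()
        result = Result(cache.request_id)
        for _ in range(max_new_tokens):
            # Placeholder "model": a real decoder would run the network here.
            # The dummy token is derived from the current cache length.
            token = f"tok{len(cache.tokens)}"
            cache.tokens.append(token)  # the cache grows by one entry per new token
            result.output_tokens.append(token)
        results.append(result)
    return results


if __name__ == "__main__":
    handoff: Queue = Queue()
    prefill_worker({1: "explain disaggregated serving", 2: "hello"}, handoff)
    for r in decode_worker(handoff, max_new_tokens=3):
        print(r.request_id, r.output_tokens)
```

Separating the pools lets each be sized and scheduled for its own bottleneck, which is the throughput and latency argument behind the design.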

Another significant announcement was NVIDIA's decision to open-source cuOpt, its GPU-accelerated decision optimization engine. cuOpt solves hard routing and resource-allocation problems in real time, exploring billions of variables to find optimal solutions. With major operations research players already using or evaluating the open-source cuOpt, NVIDIA intends to build an ecosystem around real-time optimization.
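To make "routing problem" concrete, the sketch below solves a tiny single-vehicle case (a traveling salesman tour from a depot) using a nearest-neighbor construction followed by 2-opt improvement, a classic heuristic. It does not use cuOpt or its API, and the stop coordinates are made up. A GPU solver such as cuOpt targets far larger instances with additional constraints such as vehicle capacities and time windows.

```python
import math

Point = tuple[float, float]


def route_length(route: list[int], pts: list[Point]) -> float:
    """Total length of a closed tour that returns to its starting point."""
    return sum(
        math.dist(pts[route[i]], pts[route[(i + 1) % len(route)]])
        for i in range(len(route))
    )


def nearest_neighbor(pts: list[Point], start: int = 0) -> list[int]:
    """Build an initial tour by always visiting the closest unvisited stop."""
    unvisited = set(range(len(pts))) - {start}
    route = [start]
    while unvisited:
        last = pts[route[-1]]
        nxt = min(unvisited, key=lambda j: math.dist(last, pts[j]))
        route.append(nxt)
        unvisited.remove(nxt)
    return route


def two_opt(route: list[int], pts: list[Point]) -> list[int]:
    """Reverse tour segments (keeping the depot first) while doing so shortens it."""
    best = route[:]
    improved = True
    while improved:
        improved = False
        for i in range(1, len(best) - 1):
            for j in range(i + 1, len(best)):
                candidate = best[:i] + best[i:j + 1][::-1] + best[j + 1:]
                if route_length(candidate, pts) < route_length(best, pts) - 1e-12:
                    best, improved = candidate, True
    return best


if __name__ == "__main__":
    # Stop 0 is the depot; all coordinates are hypothetical.
    stops = [(0, 0), (0, 2), (3, 3), (2, 0), (1, 1), (3, 1)]
    tour = two_opt(nearest_neighbor(stops), stops)
    print(tour, round(route_length(tour, stops), 3))
```

The number of possible tours grows factorially with the number of stops, which is why real-time solvers rely on heuristics and massive parallelism rather than exhaustive search.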

Jensen Huang introduced the NVIDIA Llama Nemotron family of open reasoning AI models. Built on Meta's Llama models and post-trained by NVIDIA, they excel at complex multi-step reasoning, code generation, and decision-making. NVIDIA says its post-training improves accuracy by up to 20% over the base models and that the models run inference up to 5x faster than other leading open reasoning models.

NVIDIA also added agentic AI tools to its AI Enterprise software suite to help developers build intelligent agents. NVIDIA Agent IQ is an open-source toolkit for making agent reasoning transparent and optimizing agent behavior, while the NVIDIA AI-Q Blueprint connects knowledge bases to AI agents. New NIM microservices support model serving, continuous learning, and real-time adaptation.
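Agentic systems of the kind these toolkits support usually run a simple loop: decide on an action, call a tool, observe the result, and repeat until an answer is ready. The sketch below shows only that control flow. The `plan` function is a hypothetical, rule-based stand-in for a reasoning model, and the two tools and their data are toys; none of this is the Agent IQ or AI-Q interface.

```python
from typing import Callable


# Toy tools the agent can call. Names and data are illustrative only.
def lookup(key: str) -> str:
    knowledge_base = {"gpus_per_rack": "72"}
    return knowledge_base.get(key, "unknown")


def multiply(expr: str) -> str:
    a, b = (int(x) for x in expr.split("*"))
    return str(a * b)


TOOLS: dict[str, Callable[[str], str]] = {"lookup": lookup, "multiply": multiply}


def plan(question: str, observations: list[str]) -> tuple[str, str]:
    """Hypothetical stand-in for a reasoning model: choose the next (action, input)."""
    racks = int(question.split()[0])
    if not observations:
        return "lookup", "gpus_per_rack"
    if len(observations) == 1:
        return "multiply", f"{racks}*{observations[0]}"
    return "answer", observations[-1]


def run_agent(question: str, max_steps: int = 5) -> str:
    observations: list[str] = []
    for _ in range(max_steps):
        action, arg = plan(question, observations)
        if action == "answer":
            return arg
        # Call the chosen tool and feed its result into the next decision.
        observations.append(TOOLS[action](arg))
    return "gave up"


if __name__ == "__main__":
    print(run_agent("3 racks: how many GPUs?"))
```

The step budget (`max_steps`) is a common safeguard: it bounds how long an agent can loop before it must stop.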

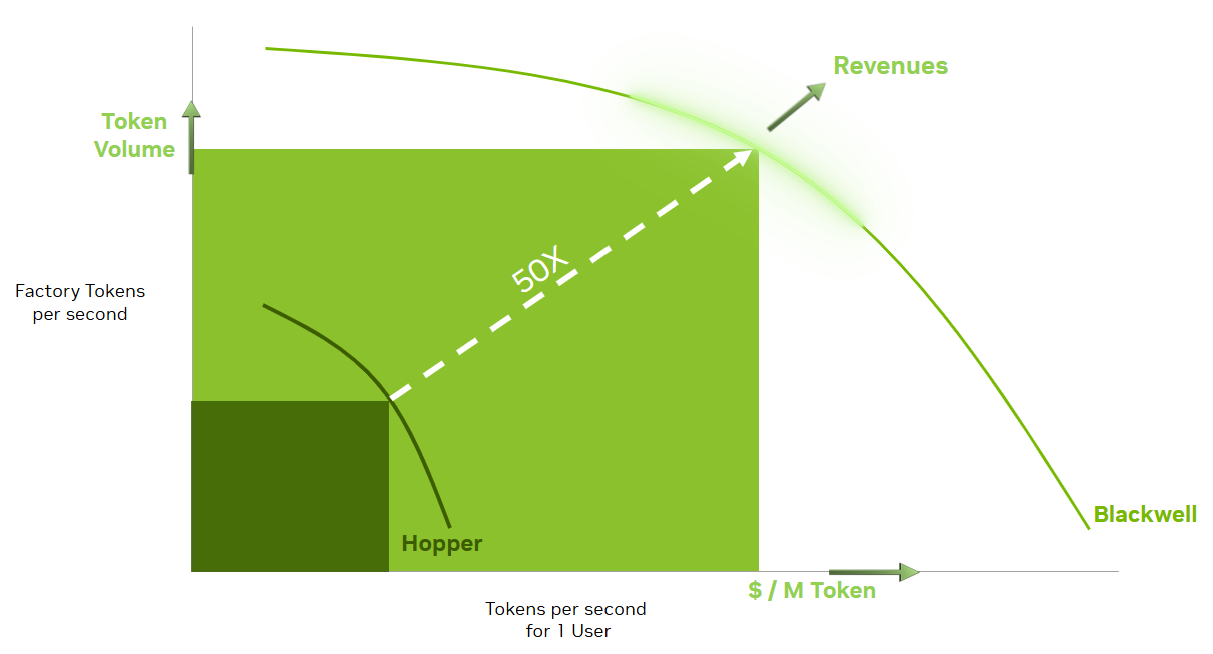

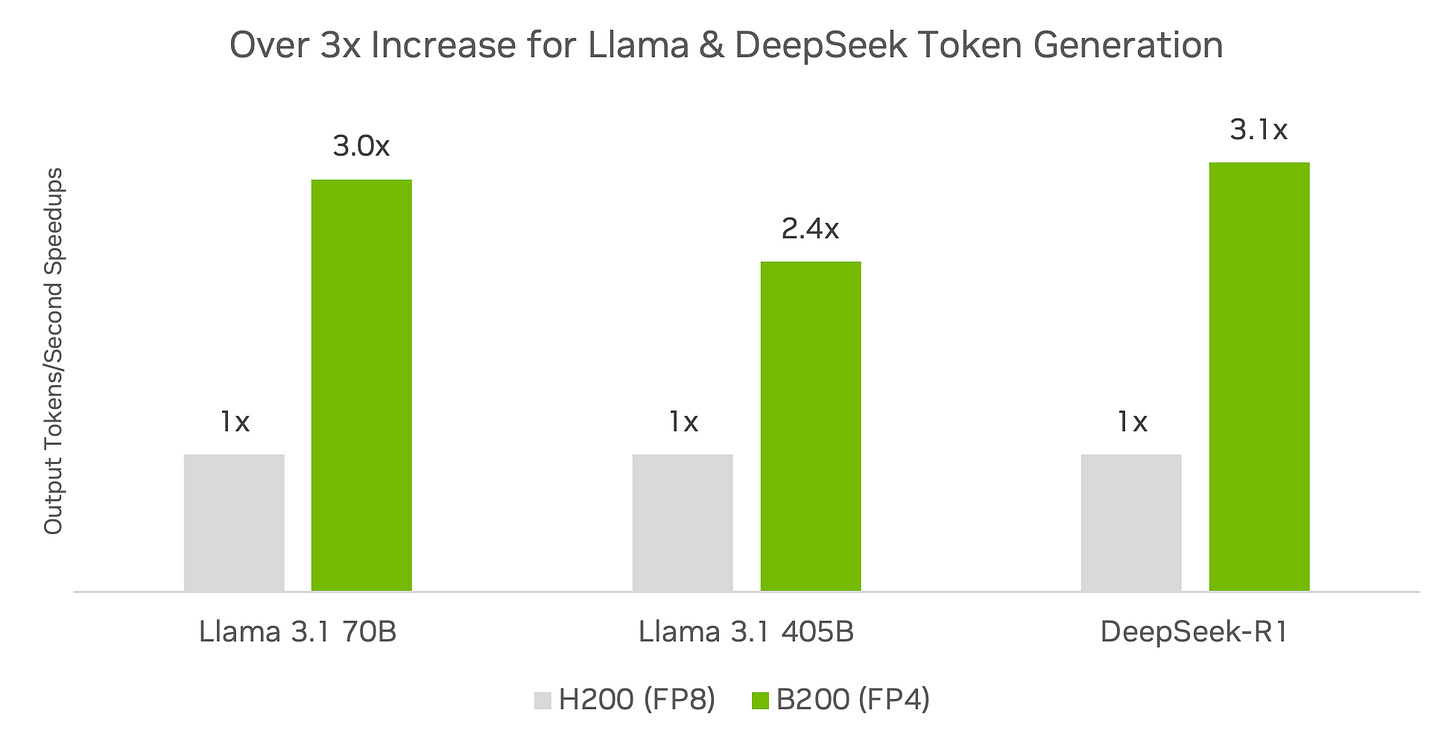

NVIDIA launched DGX Cloud Benchmarking, a suite of tools for optimizing AI workloads in the cloud. NVIDIA reports that popular models such as DeepSeek-R1, Llama 3.1 405B, and Llama 3.3 70B, running on the DGX B200 platform with TensorRT software at FP4 precision, achieve more than 3x the inference throughput of the DGX H200 platform.

NVIDIA also showcased the NVIDIA Omniverse Blueprint for AI factories, which includes templates and simulation tools for designing and optimizing huge AI data centers in a virtual environment prior to actual construction.

Additionally, NVIDIA Cosmos provides world foundation models for training robots in sophisticated simulation environments.

Robotics is the next big AI frontier.

Huang stated, "The age of generalist robotics is here," predicting that "physical AI" robotics will be the next trillion-dollar industry. Robots hold promise in manufacturing, healthcare, warehouses, and more, and NVIDIA wants to supply both the brains and the tools for them.

The standout in this category was Isaac GR00T N1, which NVIDIA describes as the first open, fully customizable foundation model for general-purpose humanoid robots. Developers can use this large AI model (think of it as a robot's "brain") to build intelligent robots that can perform many different tasks.

According to the Associated Press, Isaac GR00T N1 will be trained with synthetic data from NVIDIA's upgraded Cosmos simulation models to power humanoid robots. Experts believe this open platform could allow large tech companies, academic researchers, and smaller labs to teach robots new skills using simulated data.

Watch the video here.

Alongside its robot "brain," NVIDIA introduced Cosmos world models. Cosmos generates complex, photorealistic virtual worlds that can be customized using Omniverse 3D technology. Why does this matter? By practicing across countless simulated environments, AI models can learn physics, navigation, and problem-solving from experience, much as people do.

Huang showed that NVIDIA can generate virtually unlimited, controllable training data with Omniverse and Cosmos. Robots can practice in a virtual sandbox far faster than they could learn from human-collected data alone, a milestone in synthetic data generation for AI training.
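One common way to produce controllable synthetic data is domain randomization: sample many variations of a simulated scene from chosen parameter ranges, simulate each one, and record the ground-truth label the simulator knows exactly. In the sketch below, the scene parameters, their ranges, and `simulate_grasp` are all hypothetical placeholders for what a simulator built on Omniverse or a Cosmos model would provide. The fixed random seed makes each dataset exactly reproducible, which is part of what "controlled" means here.

```python
import random
from dataclasses import dataclass


@dataclass
class Scene:
    light_intensity: float  # arbitrary units; only changes appearance here
    friction: float         # coefficient between gripper and object
    object_x: float         # object position on the table, meters
    object_mass: float      # kilograms


def sample_scene(rng: random.Random) -> Scene:
    """Draw one randomized scene; the ranges are illustrative assumptions."""
    return Scene(
        light_intensity=rng.uniform(0.2, 1.0),
        friction=rng.uniform(0.1, 0.9),
        object_x=rng.uniform(-0.5, 0.5),
        object_mass=rng.uniform(0.1, 2.0),
    )


def simulate_grasp(scene: Scene) -> bool:
    """Placeholder simulator: the grasp holds if friction force exceeds the weight.

    Assumes a fixed grip force; a real simulator would compute contact physics.
    """
    grip_force = 10.0  # newtons, hypothetical
    gravity = 9.81     # m/s^2
    return scene.friction * grip_force >= scene.object_mass * gravity


def generate_dataset(n: int, seed: int = 0) -> list[tuple[Scene, bool]]:
    rng = random.Random(seed)  # fixed seed -> identical dataset on every run
    return [(s, simulate_grasp(s)) for s in (sample_scene(rng) for _ in range(n))]


if __name__ == "__main__":
    data = generate_dataset(1000)
    success_rate = sum(label for _, label in data) / len(data)
    print(f"{len(data)} labeled scenes, {success_rate:.1%} successful grasps")
```

Parameters such as lighting and position vary even though they do not affect the label, so a model trained on the data cannot overfit to one particular look of the scene.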

NVIDIA also launched Newton, an open-source physics engine for robotics developed with Google DeepMind and Disney Research. Newton is designed to precisely simulate gravity, friction, and object interactions for AI training, so that robots trained in virtual worlds behave realistically in the real world. "Blue," a boxy robot, closed the robotics segment with a live appearance, beeping and booping at Huang, an impressive demonstration of how far practical robots have come.
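At its core, a physics engine advances every object's state in small time steps, applying forces such as gravity and friction and resolving contacts. The sketch below shows that loop for a single box, using semi-implicit Euler integration, a perfectly inelastic ground contact, and Coulomb (kinetic) friction. It is a deliberately minimal illustration, not Newton's API; the time step, friction coefficient, and initial conditions are assumptions.

```python
from dataclasses import dataclass

GRAVITY = 9.81  # m/s^2


@dataclass
class Body:
    x: float   # horizontal position, m
    y: float   # height above the ground, m
    vx: float  # horizontal velocity, m/s
    vy: float  # vertical velocity, m/s


def step(body: Body, dt: float, mu: float) -> None:
    """Advance one time step with gravity, a ground contact, and kinetic friction."""
    if body.y <= 0.0:
        # On the ground: friction decelerates horizontal motion by mu * g,
        # but never reverses the direction of travel.
        decel = mu * GRAVITY * dt
        if abs(body.vx) <= decel:
            body.vx = 0.0
        else:
            body.vx -= decel if body.vx > 0 else -decel
    else:
        body.vy -= GRAVITY * dt  # free fall
    # Semi-implicit Euler: update positions using the new velocities.
    body.x += body.vx * dt
    body.y += body.vy * dt
    if body.y < 0.0:
        # Inelastic contact: snap to the ground and stop vertical motion.
        body.y = 0.0
        body.vy = 0.0


def simulate(body: Body, mu: float, dt: float = 1e-3, t_max: float = 10.0) -> Body:
    for _ in range(int(t_max / dt)):
        step(body, dt, mu)
    return body


if __name__ == "__main__":
    box = simulate(Body(x=0.0, y=0.0, vx=2.0, vy=0.0), mu=0.5)
    expected = 2.0**2 / (2 * 0.5 * GRAVITY)  # analytic stopping distance v0^2 / (2 mu g)
    print(f"simulated {box.x:.3f} m vs analytic {expected:.3f} m")
```

Comparing the simulated stopping distance with the analytic value is a small example of the validation that makes simulation-trained behavior trustworthy in the real world.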

Other important announcements included:

Self-driving car partnership: Nvidia partnered with GM to develop autonomous driving and smart factory AI technologies.

Safety technology for autonomous vehicles: Nvidia unveiled Halos, a full-stack safety technology for autonomous vehicles, covering sensor data processing, driving policy AI, and redundant fail-safes.

Quantum computing initiatives: Nvidia held its first “Quantum Day” at GTC 2025, unveiled a hybrid quantum-classical computing platform, and announced a new quantum research center.

What does it mean for the future of AI?

Huang's claim that reasoning AI needs roughly 100x more compute than the industry expected just a year ago points to larger, more powerful AI systems. NVIDIA’s GTC 2025 reveals a future where AI advances rapidly, driven by powerful GPUs and software.
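One reason AI compute demand has grown so quickly is that reasoning models generate far more tokens per answer. A common rule of thumb puts the inference cost of a dense transformer at roughly 2 floating-point operations per parameter per generated token, ignoring attention overhead. Under that approximation, the back-of-the-envelope sketch below compares a short direct answer with a long reasoning trace; the model size and token counts are illustrative assumptions, not figures from the keynote.

```python
def inference_flops(params: float, tokens: int) -> float:
    """Approximate forward-pass FLOPs: about 2 FLOPs per parameter per token."""
    return 2.0 * params * tokens


params = 70e9             # hypothetical 70-billion-parameter dense model
direct_answer = 100       # tokens in a short, one-shot reply (assumed)
reasoning_trace = 10_000  # tokens when the model "thinks" step by step (assumed)

short = inference_flops(params, direct_answer)
with_reasoning = inference_flops(params, reasoning_trace)
print(f"direct answer:   {short:.1e} FLOPs")
print(f"reasoning trace: {with_reasoning:.1e} FLOPs ({with_reasoning / short:.0f}x more)")
```

Because cost scales linearly with tokens under this approximation, a reasoning trace 100 times longer costs about 100 times more compute for the same model.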

Smarter AI assistants will take charge of complex workflows, enabling massive gains across economic sectors. Breakthroughs in robotics, cloud computing, and quantum computing aim to democratize AI, making it accessible to businesses and individuals while addressing challenges like energy efficiency and AI ethics.

Expect smarter AI agents. With higher inference efficiency, conversational AI, real-time translation, and recommendation engines will run faster and cheaper. This means more responsive AI-powered services for customers, such as voice assistants that feel more human and understand context better or streaming services that swiftly customize content. AI will become more common in everyday products and services, even in companies without large AI infrastructures.

AI workloads could grow 10x or even 100x within a few years, and progress so far has been impressive. By the late 2020s, autonomous machines, real-time simulations, and advanced AI models might be commonplace. AI could instantly interpret long discussions or simulate complex scenarios.

Competition will intensify as progress accelerates. NVIDIA leads, while AMD and other players, including Google with its TPUs and Amazon with Trainium, are investing heavily to stay competitive. Competition in AI hardware speeds technological progress, which benefits everyone; however, if a few corporations dominate the market, power could become concentrated. As competition grows, consumers can expect more powerful, more affordable AI technology.

So much processing capacity brings energy and infrastructure challenges. NVIDIA predicts that future systems will need more power and cooling, so data centers may need renewable energy and better cooling technology to support AI's growth. Sustainability must be part of the conversation as AI scales.

Chart of the week:

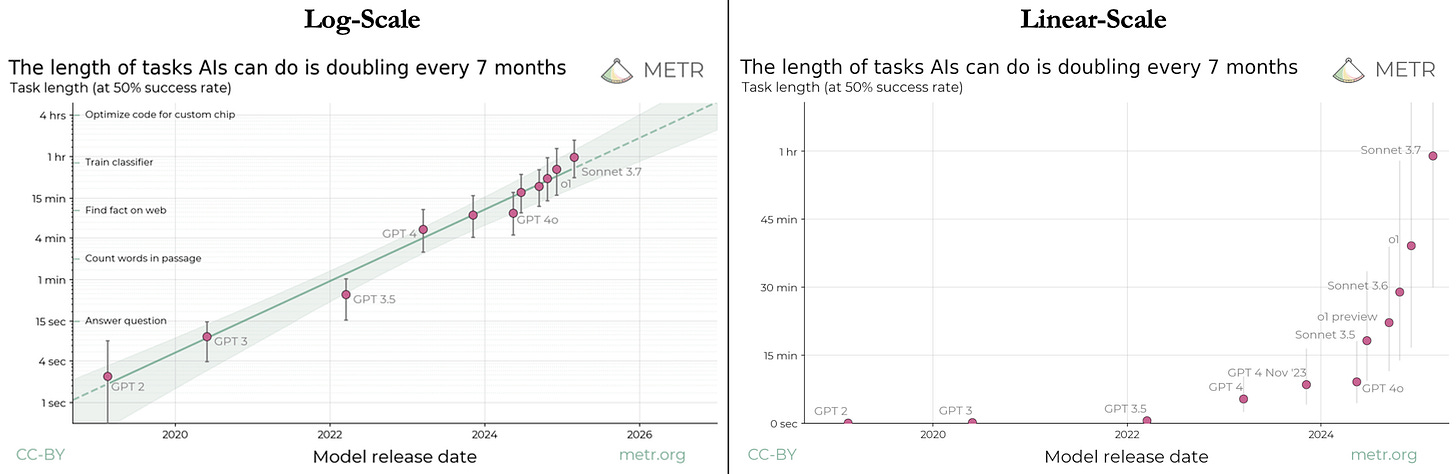

The length of tasks AI agents can complete autonomously has been doubling every 7 months for the past 6 years. METR, an organization that evaluates frontier AI models, developed a new metric, the "task-completion time horizon," which measures the length of tasks (in terms of how long they take skilled humans) that AI models can complete with a 50% success rate.

If the trend continues, the study predicts that within five years AI agents will be able to perform many tasks that currently take humans days or weeks. The findings argue for measuring AI performance by task length to better forecast and understand its real-world impact.
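The five-year projection follows from compounding the doubling trend. If the time horizon doubles every 7 months, it grows by a factor of 2^(t/7) after t months, so 60 months gives 2^(60/7) ≈ 380. The sketch below makes that arithmetic explicit; the 1-hour starting horizon is a placeholder input, not a figure from the METR study.

```python
DOUBLING_MONTHS = 7  # doubling period reported in the study


def growth_factor(months: float, doubling_months: float = DOUBLING_MONTHS) -> float:
    """How much the task-length horizon multiplies after `months` of steady doubling."""
    return 2 ** (months / doubling_months)


def projected_horizon(current_hours: float, months: float) -> float:
    """Extrapolate a starting horizon forward, assuming the trend holds."""
    return current_hours * growth_factor(months)


if __name__ == "__main__":
    factor = growth_factor(60)  # five years
    print(f"growth over five years: ~{factor:.0f}x")
    # Hypothetical starting point: a 1-hour horizon today.
    print(f"1-hour horizon today -> ~{projected_horizon(1.0, 60):.0f} hours")
```

Small changes in the doubling period compound quickly: the same function with a 6- or 8-month period gives a very different five-year figure, which is why the projection depends on the trend holding.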