Welcome to Binary Circuits’ 12th weekly edition

Your weekly guide to the most important developments in the tech world

Dear Readers,

Welcome to this week's Binary Circuit. This week, we review some remarkable technological advances that are shaping the future. The pace of progress is striking: new interfaces are bridging the gap between brain and machine, powerful AI agents are automating complex work, and innovative energy solutions are turning waste into a useful resource. We also discuss why a distinctively human trait, intuition, still matters for navigating this fast-changing technological and business landscape.

Let’s dive in.

China’s Manus AI agent provides a glimpse into an autonomous future

Manus AI, a general-purpose AI agent, can independently plan and complete a variety of tasks, such as booking travel and analyzing stocks. It runs autonomously and asynchronously, using a multi-agent architecture in which an executive agent supervises specialized sub-agents and breaks difficult tasks down into manageable steps.
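To make the executive/sub-agent pattern concrete, here is a minimal Python sketch of an orchestrator that splits a task into steps and hands each one to a specialist. It is not Manus's code: the agent names (`browser_agent`, `analyst_agent`, `writer_agent`), the fixed three-step plan, and the `Executive` class are all hypothetical stand-ins. The sub-agents only return strings instead of calling real tools or models, and the steps run sequentially rather than asynchronously to keep the example short.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical sub-agents: each maps a step description to a result string.
SubAgent = Callable[[str], str]


def browser_agent(step: str) -> str:
    return f"[browser] researched: {step}"


def analyst_agent(step: str) -> str:
    return f"[analyst] analyzed: {step}"


def writer_agent(step: str) -> str:
    return f"[writer] drafted: {step}"


@dataclass
class Step:
    description: str
    agent: str  # name of the sub-agent that should handle this step


class Executive:
    """Toy 'executive' that breaks a task into steps and delegates each to a specialist."""

    def __init__(self, agents: dict[str, SubAgent]):
        self.agents = agents

    def plan(self, task: str) -> list[Step]:
        # A real system would ask a language model to produce the plan;
        # here the plan is a fixed, hypothetical template.
        return [
            Step(f"gather data for '{task}'", "browser"),
            Step(f"compare findings for '{task}'", "analyst"),
            Step(f"write report on '{task}'", "writer"),
        ]

    def run(self, task: str) -> list[str]:
        # Execute each planned step with the sub-agent named in that step.
        return [self.agents[step.agent](step.description) for step in self.plan(task)]


executive = Executive(
    {"browser": browser_agent, "analyst": analyst_agent, "writer": writer_agent}
)
for line in executive.run("stock analysis of ACME"):
    print(line)
```

Keeping the planner separate from the workers means a specialist can be swapped or added without touching the planning logic, which is the main appeal of this kind of architecture.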

Rather than relying on a proprietary model of its own, Manus builds a software layer around existing strong models such as Claude 3.5 Sonnet and Qwen. It interacts with the digital environment by combining several tools, including a web browser, a shell executor, and file-reading capabilities.

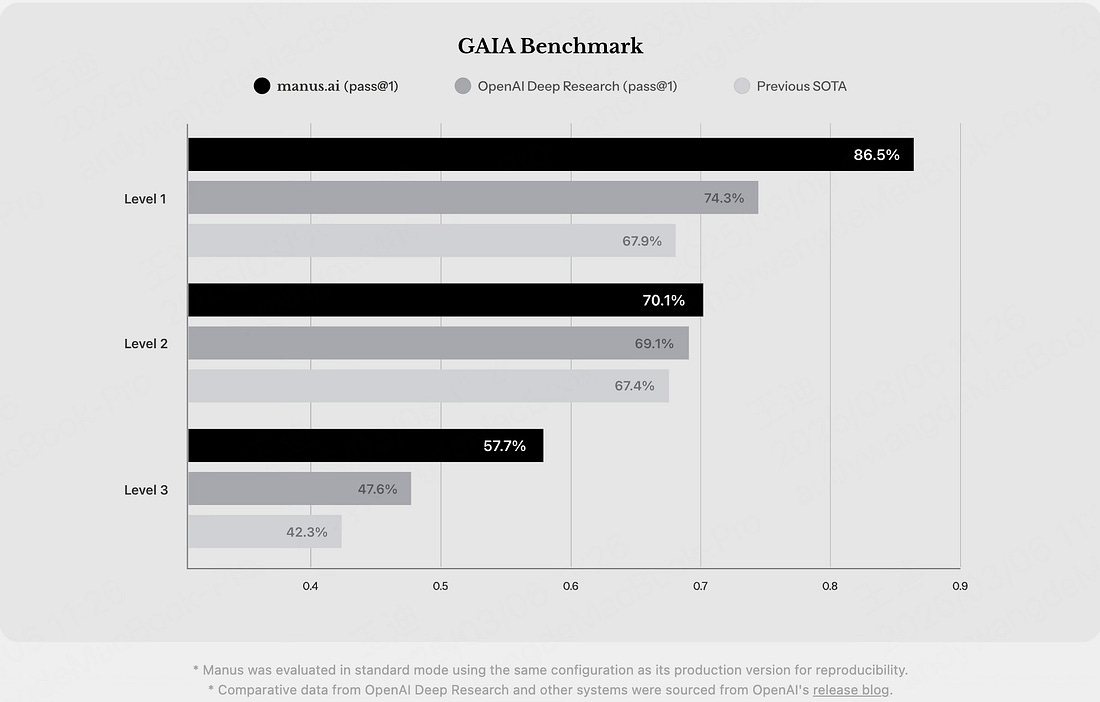

Early tests and user reviews point to remarkable performance, with Manus even surpassing OpenAI's Deep Research on some tasks. Its potential applications are many, from summarizing research and comparing products to creating content and building retro-style games.

Manus is a powerful sign of where artificial intelligence is headed: toward a time when autonomous AI assistants are commonplace. It has drawn considerable attention from industry experts, who have praised its ability to complete tasks more efficiently than comparable products such as OpenAI's Operator or Anthropic's Computer Use.

The arrival of Manus and other AI agents has significant implications: the automation of jobs and its impact on the labor market, a sharper focus on product and engineering, competition and innovation (including worldwide competition), and questions of safety, liability, and regulation. Manus AI is still in beta, but its remarkable capabilities are a sobering reminder that the age of autonomous AI agents is fast approaching.

Two breakthrough developments mark a milestone in brain-computer interface research

Rapidly evolving brain-computer interfaces (BCIs) hold great potential to transform how humans interact with technology and to restore lost capabilities. Recent developments in both non-invasive brain-to-text decoding and two-way adaptive neural interfaces illustrate the fascinating progress being made at Meta AI and at Tianjin University and Tsinghua University in China.

Meta's Brain2Qwerty is a deep learning architecture designed to decode sentences from brain activity recorded with electroencephalography (EEG) and magnetoencephalography (MEG) while participants type. It narrows the performance gap between non-invasive and invasive BCI approaches.

MEG clearly outperformed EEG, with an average character error rate (CER) of 32% compared with EEG's 67% (lower is better). The best MEG participants reached a CER of just 19%, and the model was able to decode sentences that were not in its training set.
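For readers unfamiliar with the metric: CER is usually defined as the character-level edit (Levenshtein) distance between the decoded text and the reference text, divided by the length of the reference. The sketch below assumes that standard definition; the exact evaluation protocol used for Brain2Qwerty may differ. Under this definition, a 32% CER means roughly one character in three would need to be corrected.

```python
def levenshtein(a: str, b: str) -> int:
    """Minimum number of single-character insertions, deletions, and substitutions turning a into b."""
    prev = list(range(len(b) + 1))  # distances from the empty prefix of a
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(
                prev[j] + 1,         # delete ca
                curr[j - 1] + 1,     # insert cb
                prev[j - 1] + cost,  # substitute (or keep a matching character)
            ))
        prev = curr
    return prev[-1]


def cer(reference: str, hypothesis: str) -> float:
    """Character error rate: edit distance divided by the reference length."""
    if not reference:
        raise ValueError("reference must be non-empty")
    return levenshtein(reference, hypothesis) / len(reference)


print(cer("hello world", "hallo wrld"))
```

Here the decoded string differs from the reference by one substitution ("e" to "a") and one deletion (the "o" in "world"), so the CER is 2/11, about 18%.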

This suggests that non-invasive BCIs could approach the accuracy of invasive implants and offer safer communication options for patients who are unable to communicate.

Researchers in China have reported the first published two-way adaptive BCI, which uses a memristor-based neuromorphic decoder to achieve a 100-fold boost in efficiency and movement control with four degrees of freedom. Its dual-loop feedback design pairs a machine learning loop, which continuously updates the brain-signal decoder, with a user feedback loop, which lets users gradually refine their thought patterns over time, improving control and flexibility. This mutual adaptation between human cognition and machine intelligence is a first step toward brain-computer co-evolution.
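The dual-loop idea can be illustrated with a deliberately simple simulation. This is a toy under stated assumptions, not the study's memristor decoder or training procedure: the "brain signal" is a single noisy number, the machine loop is a standard least-mean-squares (LMS) update swapped in for whatever method the researchers actually used, and the user loop is modeled as a signal gain that gradually strengthens, a hypothetical stand-in for users refining their thought patterns. The function name and all constants are invented for illustration.

```python
import random


def simulate(steps: int = 200, seed: int = 0) -> tuple[float, float]:
    """Toy co-adaptation; returns (mean squared error of the first 20 trials, of the last 20)."""
    rng = random.Random(seed)
    gain = 0.3        # user side: how strongly intent shows up in the signal (hypothetical)
    weight = 0.0      # machine side: the decoder's single parameter
    lr = 0.1          # decoder learning rate
    user_rate = 0.02  # how quickly the user's mental strategy improves (hypothetical)
    errors = []
    for _ in range(steps):
        intent = rng.uniform(-1.0, 1.0)               # intended movement
        signal = gain * intent + rng.gauss(0.0, 0.1)  # noisy "brain signal"
        error = intent - weight * signal              # decoding error on this trial
        errors.append(error ** 2)
        # Machine loop: LMS update nudges the decoder toward lower error.
        weight += lr * error * signal
        # User loop: feedback gradually produces a stronger, clearer signal.
        gain += user_rate * (1.0 - gain)
    return sum(errors[:20]) / 20, sum(errors[-20:]) / 20


early, late = simulate()
print(f"early MSE {early:.3f}, late MSE {late:.3f}")
```

With both loops active, the average squared error over the last 20 trials should be much smaller than over the first 20: the decoder learns the mapping while the user's signal becomes easier to decode.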

The system's accuracy is highlighted by its ability to operate virtual drones with precisely tuned movements. It also promises seamless integration with AI-driven external devices, improving the user experience.

Because the system adapts to its user, it lowers cognitive load, which may make it accessible to people with a range of cognitive abilities. These developments open the path to advanced applications, including improved prostheses, human-machine symbiosis, and medical rehabilitation.

These results complement other BCI advances worldwide, including Neuralink's implanted BCIs, which let users control devices by thought, and Precision Neuroscience's minimally invasive BCIs. Meanwhile, new regulatory measures in China aim to accelerate the growth of its BCI industry and support high-quality innovation.

Microsoft's Magma enables intelligent AI agents to operate in digital and physical environments

Microsoft Research has introduced Magma, a foundation model for multimodal AI agents that perceives the world and performs goal-driven tasks across digital and physical contexts. It is designed to address a key limitation of existing AI models: their difficulty adapting to different environments.

Magma aims to be a single foundation model that can adapt to new tasks in both digital and physical environments, allowing AI-powered assistants and robots to perceive their surroundings and propose suitable actions.

Magma uses Set-of-Mark (SoM) to label actionable visual elements in images (such as clickable buttons) and Trace-of-Mark (ToM) to track object movements in videos. These techniques help Magma learn spatial intelligence from large-scale training data and turn visual and verbal inputs into meaningful actions. Text is tokenized, images and videos are encoded by a shared vision encoder, and both are fed into a large language model (LLM) that generates verbal, spatial, and action outputs.
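The Set-of-Mark idea can be sketched in a few lines: number each candidate actionable region in a screenshot, let the model answer with a mark ID instead of raw coordinates, then map that ID back to a location. This sketch assumes the candidate boxes already come from some UI parser or detector, and it uses a hard-coded reply in place of a model call. `Box`, `assign_marks`, `build_prompt`, and `mark_to_click` are hypothetical helpers, not part of any Magma API.

```python
import re
from dataclasses import dataclass


@dataclass
class Box:
    """A candidate actionable region in screen pixels (hypothetical detector output)."""
    x0: int
    y0: int
    x1: int
    y1: int

    def center(self) -> tuple[int, int]:
        return ((self.x0 + self.x1) // 2, (self.y0 + self.y1) // 2)


def assign_marks(boxes: list[Box]) -> dict[int, Box]:
    """Give each region a numeric mark, as would be overlaid on the screenshot."""
    return {i: box for i, box in enumerate(boxes, start=1)}


def build_prompt(goal: str, marks: dict[int, Box]) -> str:
    # The model would see the marked image; the text only lists the available mark IDs.
    ids = ", ".join(str(i) for i in marks)
    return f"Goal: {goal}\nMarks shown: {ids}. Reply with the mark to click, e.g. 'Mark 2'."


def mark_to_click(answer: str, marks: dict[int, Box]) -> tuple[int, int]:
    """Turn a reply such as 'Mark 2' into pixel coordinates (the center of that region)."""
    match = re.search(r"Mark\s+(\d+)", answer)
    if match is None or int(match.group(1)) not in marks:
        raise ValueError(f"no valid mark in answer: {answer!r}")
    return marks[int(match.group(1))].center()


marks = assign_marks([Box(10, 10, 90, 40), Box(100, 10, 180, 40)])
print(build_prompt("Submit the form", marks))
print(mark_to_click("Mark 2", marks))
```

Answering with a mark ID keeps the model's output short and discrete, while the conversion to pixel coordinates stays in ordinary, checkable code.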

Magma could improve AI assistants for step-by-step navigation and UI automation, and its deeper understanding of real-world objects could lead to more adaptable robots. From healthcare to industrial automation, Magma's multimodal intelligence has the potential to redefine human-AI collaboration.

Magma is a major step toward a future in which AI genuinely understands and interacts with the complexity of our environment.

Batteries from nuclear waste could solve many challenges in digitally connecting remote assets

Researchers at Ohio State University have created a nuclear battery that generates electricity from the radiation emitted by nuclear waste. The technique could power small electronic devices while helping to address one of the major problems of nuclear waste management.

The battery pairs scintillator crystals, which emit light when exposed to radiation, with solar (photovoltaic) cells like those in rooftop solar panels, which convert that light into electricity.

The team tested its prototype, which measures about four cubic centimeters, using two common radioactive isotopes found in nuclear waste: cesium-137 and cobalt-60. The results were promising: the battery produced 288 nanowatts of power when run on cesium-137.
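To put the cesium-137 result in perspective, here is a quick back-of-the-envelope calculation from the figures above. It assumes a prototype volume of about 4 cm³ and, for the second expression, that identical cells could be combined with no losses in the same radiation field; both are simplifications for illustration only.

```latex
\frac{288\ \text{nW}}{4\ \text{cm}^3} = 72\ \text{nW/cm}^3,
\qquad
\frac{1\ \text{mW}}{288\ \text{nW}} = \frac{10^{6}\ \text{nW}}{288\ \text{nW}} \approx 3{,}472\ \text{cells}
```

In other words, reaching even one milliwatt would take thousands of such cells under these idealized assumptions, which illustrates why increasing power output remains a key challenge.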

This technology offers a potential way to turn a problem into a useful resource, especially in environments where high levels of radiation are already present, such as nuclear waste storage facilities, deep-sea research, and space missions. Importantly, the battery itself contains no radioactive material: it harvests gamma radiation from its surroundings, so it is safe to handle.

Several challenges remain before the technique can be widely adopted, including increasing power output, miniaturization, durability, safety, and regulatory approval. Despite these obstacles, the researchers are optimistic and believe the nuclear battery concept could play a significant role in both sensing and energy generation.

The development of a nuclear waste-powered battery is an important step toward sustainable nuclear energy. The innovation promises long-lasting, maintenance-free power, showing that one person's waste can be another's resource.

Intuition, often regarded as a gut feeling, provides leaders with a crucial advantage in a rapidly changing world

Intuition is not a magical phenomenon but a form of subconscious processing grounded in our past experiences and insights. Science indicates that the brain processes information unconsciously far faster than conscious thinking can, which can lead to better-informed decisions.

Studies indicate that intuition is especially useful when handling complex data or making snap judgments. In these studies, people who trusted their intuition reported greater life satisfaction, creativity, and self-awareness, and they solved challenging tasks faster and more accurately. Moreover, people who perform well on intuition tests have been shown to make better investment decisions.

Prominent figures such as Nike co-founder Phil Knight, NVIDIA's Jensen Huang, Steve Jobs, Oprah Winfrey, and Albert Einstein have all credited intuition for pivotal moments.

System 1 thinking, which is fast, instinctive, and emotional (the domain of gut feelings and snap judgments), has become increasingly important in today's fast-changing AI world. Leaders navigating AI-driven sectors must anticipate changes before they become obvious and react quickly, relying on their instinctive read of the landscape.

Deliberate practices such as cultivating presence, listening to your body, practicing self-reflection, trusting the universe, and believing in your first impressions can help you develop your intuitive edge.

When others hesitate, visionary leaders often take big risks, knowing that waiting for perfect conditions or complete data could mean missing important opportunities. Leaders who learn to develop and trust this natural edge will be better able to navigate uncertainty, inspire creativity, and ultimately drive transformative success.

Chart of the week:

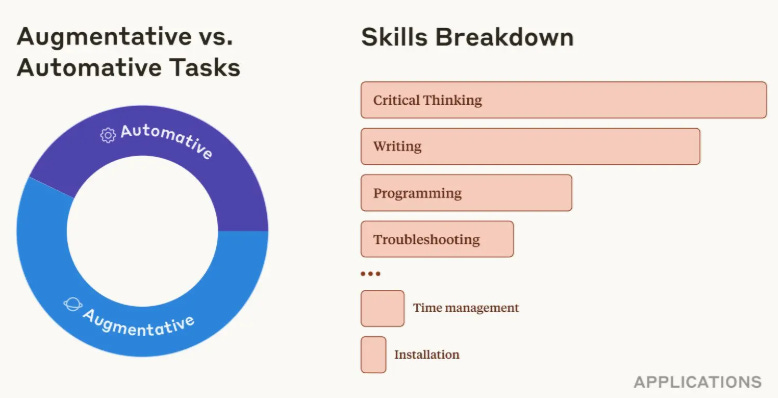

How soon will AI augmentation give way to complete automation?

For now, AI is mostly used to support professionals rather than replace them.

The Anthropic Economic Index shows that 57% of AI usage is for augmentation, where AI assists humans with tasks. Complete automation, where AI handles tasks with minimal human input, is at 43%.

The rapid, lower-cost development of AI agents for niche applications could soon flip this balance.

Sound bites you should know:

Google's newly launched open model, Gemma 3, is built for efficiency, offering a context window of up to 128K tokens and the ability to run on a single GPU. Its smallest versions can even run locally on a smartphone.

Robot-assisted surgery is on the rise! Robots are now used in 22% of surgeries, offering less bleeding, less pain, and shorter recovery times. More advances in surgery are on the way.

OpenAI's new platform lets companies boost productivity by building custom AI agents for different business uses. AI agents at factory scale?

Google's Gemini Robotics uses AI to help robots understand, interact with, and manipulate objects in a more human-like way. Combining vision, language, and action, it can control both industrial robot arms and humanoid assistants, a crucial step toward general-purpose robots. AI is becoming physical.

Microplastic pollution is damaging plants’ ability to photosynthesize, leading to crop loss and potential harm to the planet. What's the true cost of our plastic addiction?