Binary Circuit - February 21, 2025

Your weekly guide to the most important developments in the technology world

Binary Circuit investigates trends, technology, and how organizations can profit from rapid innovation. GreenLight, the brain behind Binary Circuit, identifies opportunities, analyzes challenges, and develops plans that help firms adapt, grow, and stay ahead. Looking to scale your business, build partnerships, or integrate AI and technology? Schedule a free discovery call:

Welcome to Binary Circuit’s 9th weekly edition

This week's must-know developments:

Great AI debate: Raw Computational Power vs. Efficiency. Who wins?

Virtualization is imperative: AR/VR is taking a leap forward!

Overwhelmed with Innovation? Steps to navigating the AI tsunami.

Let’s dive in!

More computational power vs. engineering and algorithmic efficiency. The race to build superior AI models. What’s more important?

A simple equation has long driven the AI race: more compute = better models. DeepSeek-V3 challenges this assumption. The model showed that better algorithms and efficiency can be just as crucial as raw computing capacity.

DeepSeek-V3 attained competitive performance without the same degree of computational capability as Western leaders, including OpenAI, Anthropic, and Google DeepMind. Instead of depending on brute-force scaling, DeepSeek emphasizes more efficient architectures that optimize how models process data, better training strategies that squeeze more performance from fewer resources, and smarter use of data to boost learning efficiency.

This raises a troubling question for Western AI companies: what if more compute isn't necessarily the solution? Is scaling compute still the dominant strategy, or are we reaching a turning point where algorithmic improvements matter more?

We believe that while efficiency gains are making strides and will play a significant role, the reality is that no one has enough compute yet to fully realize AI's potential.

Case in point: Elon Musk, a genius of our times in optimization and breakthrough technologies, is betting on more computational power.

Elon Musk’s xAI is building one of the most powerful AI supercomputers in the world: the Colossus Computer. Musk believes that AI models are fundamentally constrained by computing power, and that to stay competitive with OpenAI and Google DeepMind, xAI needs massive GPU resources to train Grok-3 and beyond.

His argument? Even if efficiency gains matter, the most significant breakthroughs will still require unprecedented computing power. We tend to agree based on the evidence.

The Colossus Computer is an engineering marvel. Musk and his team took an unprecedentedly short 122 days to install 100,000 H100 GPUs and then added another 100,000 in just 92 days. The target is to install one million GPUs. Musk started training Grok-3 as soon as the first GPU rack was installed on the 19th day, a feat that only he could have pulled off.

Colossus utilizes advanced liquid cooling solutions, including cold plates and coolant distribution units, to manage the immense heat generated by the GPUs. It's the largest liquid-cooled GPU cluster deployment in the world.

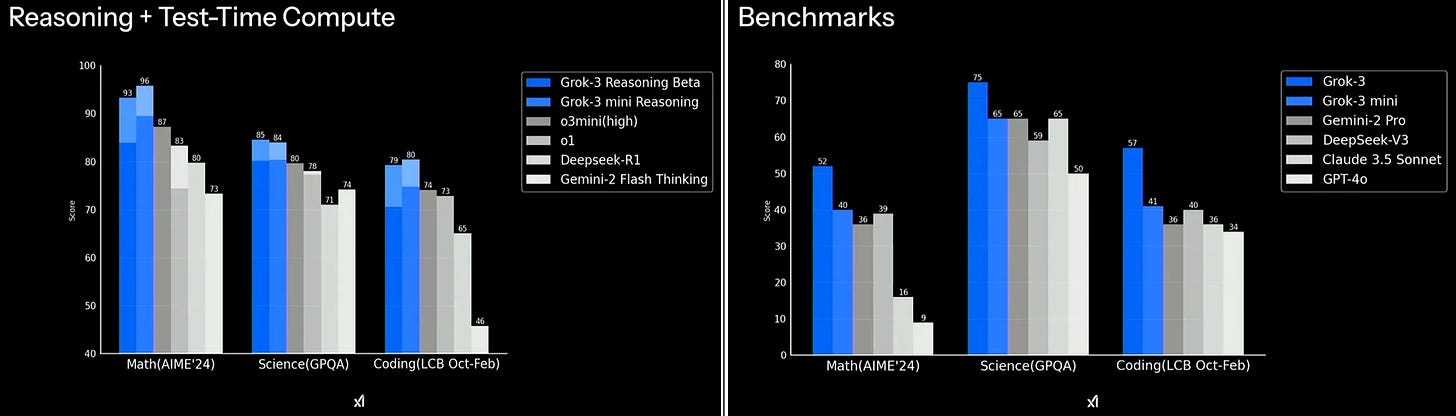

The result: xAI launched Grok-3 this week, which used 200 million GPU hours for training—10x-15x more than its predecessor, Grok-2. Elon Musk referred to Grok-3 as “scary smart.”
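To put the 200 million GPU-hour figure in perspective, here is a rough back-of-the-envelope sketch. It assumes every GPU runs in parallel at 100% utilization for the whole training run, which is an idealization and not something xAI has reported; the function name is ours and purely illustrative.

```python
def wall_clock_days(gpu_hours: float, num_gpus: int) -> float:
    """Days of training if all GPUs run in parallel at full utilization (idealized)."""
    return gpu_hours / num_gpus / 24


GROK3_GPU_HOURS = 200e6  # training budget quoted in the article

# Cluster sizes quoted in the article: the first 100,000 GPUs, then 200,000.
for gpus in (100_000, 200_000):
    days = wall_clock_days(GROK3_GPU_HOURS, gpus)
    print(f"{gpus:,} GPUs -> about {days:.0f} days of continuous training")

# Implied Grok-2 budget if Grok-3 used 10x to 15x more GPU-hours.
grok2_low = GROK3_GPU_HOURS / 15
grok2_high = GROK3_GPU_HOURS / 10
print(f"Implied Grok-2 budget: {grok2_low / 1e6:.1f}M to {grok2_high / 1e6:.1f}M GPU-hours")
```

Under these assumptions, the budget corresponds to roughly 12 weeks on 100,000 GPUs or about 6 weeks on 200,000. Real training runs are less efficient than this idealized figure.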

Key data points about Grok-3:

Benchmark Performance: Early reports suggest that Grok-3 outperforms leading models like OpenAI's GPT-4o and DeepSeek's V3 in areas such as mathematics, science, and coding, though independent verification is pending.

Enhanced Reasoning Capabilities: Incorporates advanced reasoning modes, such as "Think" and "Big Brain," enabling the model to tackle complex, multi-step problems by allocating additional computational resources.

Deep Search Functionality: Introduces a next-generation search engine that retrieves information and provides detailed explanations of its reasoning process, enhancing transparency and user trust.

However, it’s not just brute force. xAI has improved Grok-3’s capabilities beyond what increased computing power alone provides. It incorporated:

Synthetic datasets, artificially generated, simulate various scenarios and improve learning efficiency while addressing data privacy concerns.

Self-correction mechanisms allow models to identify and correct mistakes by comparing outputs with known correct responses, reducing errors and improving accuracy.

Reinforcement learning, a type of machine learning, trains models to maximize positive outcomes through trial and error, enhancing decision-making.

xAI also integrated human feedback loops and contextual training to reduce hallucinations and produce natural human-like responses. Contextual training teaches AI to understand and adapt responses based on context, considering previous interactions, user intent, and surrounding information.

So, what’s more important: computing power or efficiency?

Our take: Compute is king, but efficiency will gain importance as brute-force scaling starts to yield diminishing returns. While companies like DeepSeek demonstrate that efficiency gains can bridge the gap, no one in the AI sector believes they have “enough” compute yet.

The contest between compute-intensive and efficiency-first AI strategies is just beginning.

Why should you keep an eye on the rapid progress of AR/VR technology?

AR and VR technologies are undergoing a massive development cycle. Companies like Meta, Google, and Samsung are investing heavily to make these technologies more accessible and practical.

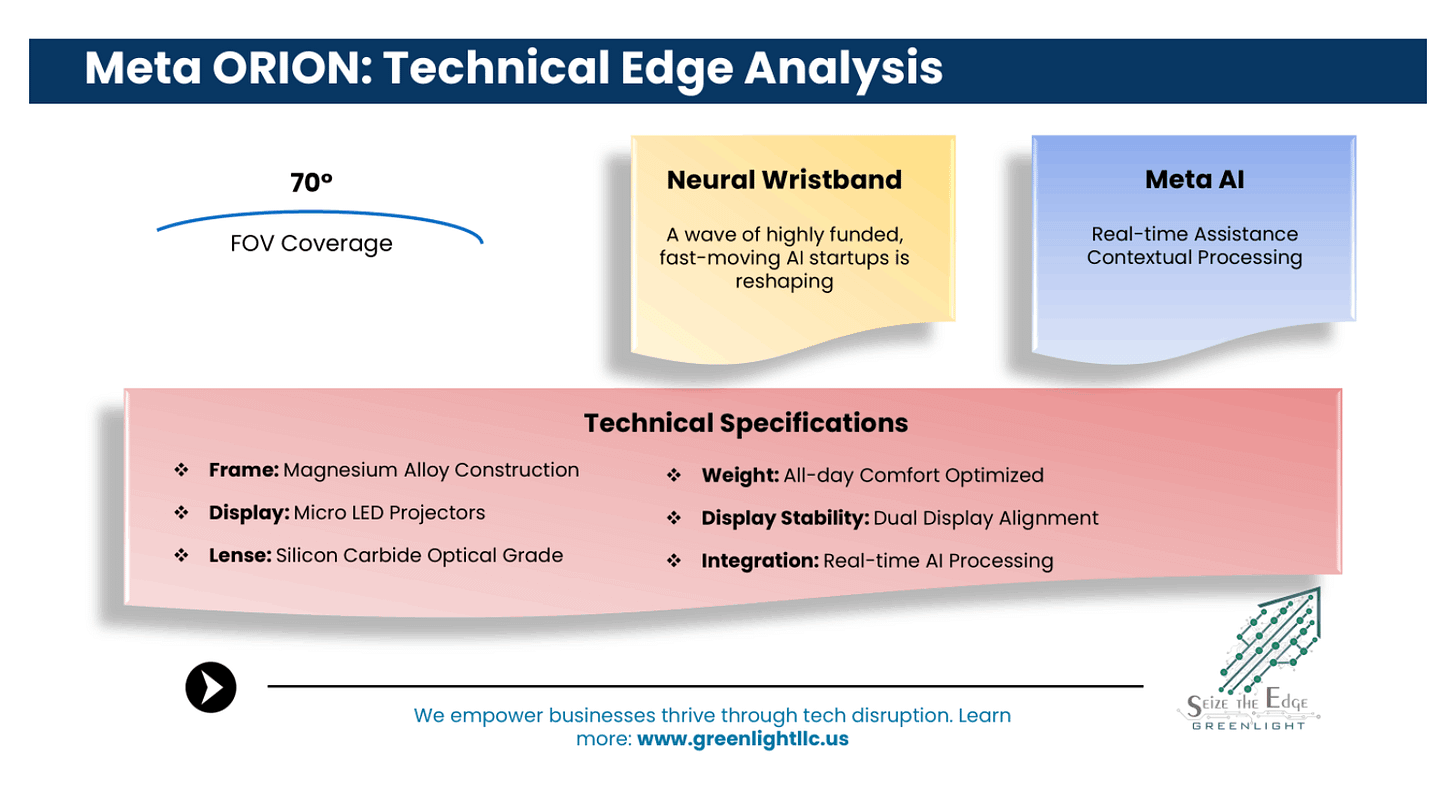

Meta is dominating the AR glasses space with its prototype Orion eyewear. Orion is lighter and less obtrusive than typical VR headsets, making it suited for daily use. Experts expect AR devices to become more glasses-like. This transition is vital for broader acceptance, as devices become less bulky and perhaps even fashionable.

Ray-Ban Meta Smart Glasses sold one million units in 2024, demonstrating AI-driven wearable demand. Meta keeps adding AI to Ray-Ban smart eyewear. These upgrades include object identification, live language translation, and music streaming service integration.

By the end of 2025, Meta intends to have cumulatively spent more than $100 billion on VR and AR. Its AR/VR unit, Reality Labs, invested $19.9 billion in 2024 and is expected to invest another $20 billion in 2025. Meta remains committed to making AR and VR widely usable even though late 2024 results show losses of about $5 billion every quarter.

Project Moohan from Google and Samsung is notable.

To challenge Meta, Google and Samsung are unveiling Project Moohan, a mixed-reality headset scheduled for 2025. Running on Android XR, this headset will broaden the AR/VR market and include AI-powered interactions.

Beyond the big players, several smaller firms are also striving to make AR/VR glasses and devices more accessible and adaptable. The lightweight and affordable Xreal Air 2 Ultra focuses on screen mirroring. TCL NXTWEAR S offers a pleasant AR experience with a large virtual display for entertainment and casual use.

Why should you care as a business owner?

AR/VR applications will significantly enhance operations in various industries and open avenues for new strategic business models. Examples include:

Improved Customer Engagement: Companies can design interactive showrooms and online buying environments.

Remote Work & Training: VR-powered conferences help to enhance learning and communication.

Healthcare: AR and VR have applications in pain management, surgical procedures, therapy, and healthcare professional training.

Education: VR and AR enhance learning through immersive environments and interactive elements.

Marketing and advertising: VR lets organizations create captivating ads and product demos.

AI-powered smart wearables and tools are expected to change customer and company behavior, even though companies like Meta currently struggle to turn a profit on them. To stay ahead, businesses should consider how AR/VR may improve operations and engagement.

The conundrum facing businesses is navigating the flood of innovation, especially in AI. What can you do?

AI development is moving at breakneck speed. Almost every day, new models, tools, and platforms surface, each seeming to be the next great thing. For business users exploring AI for growth, this is both exciting and overwhelming, and a cause of mounting concern. Every business right now is grappling with questions like: How can we stay current with this unrelenting rate of change? Where should we spend our time and money? What should we disregard, and what should we adopt?

The challenge is that businesses can’t validate and integrate particular AI models as fast as the technology keeps changing. This is best explained by Martec’s law, which states that organizations change logarithmically while technology develops exponentially. This leaves a widening gap between what is possible and what we can realistically absorb.
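For readers who like to see the numbers, here is a minimal sketch of the gap Martec’s law describes. The specific curves (technology doubling every year, organizational change growing as 1 + ln(1 + t)) are illustrative assumptions of ours, not part of the law itself, which only states the exponential-versus-logarithmic shape.

```python
import math


def technology(t: float, doubling_years: float = 1.0) -> float:
    """Exponential curve: capability doubles every `doubling_years` (assumed rate)."""
    return 2 ** (t / doubling_years)


def organization(t: float) -> float:
    """Logarithmic curve: starts at 1 and grows ever more slowly (assumed form)."""
    return 1 + math.log(1 + t)


def gap(t: float) -> float:
    """Difference between what technology offers and what the organization has absorbed."""
    return technology(t) - organization(t)


for year in (0, 1, 2, 5, 10):
    print(
        f"year {year:>2}: technology {technology(year):7.1f}  "
        f"organization {organization(year):4.1f}  gap {gap(year):7.1f}"
    )
```

Both curves start at the same point, but over the years shown the gap grows from zero to several hundred times the organization’s starting capacity. The exact values depend entirely on the assumed curves; the widening shape is the point.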

For consumers of artificial intelligence, this discrepancy shows up in numerous forms.

The sheer number of AI tools can be paralyzing. Though we research and compare for hours, we often find it hard to decide. Even when the newest trends don't fit our requirements or priorities, the FOMO trap (the fear of missing out) drives us to pursue them. We hop from one tool to the next, never really learning any of them. The result is frustration and a constant feeling of being behind the curve.

So how should we navigate this AI-powered maze? Here are some tactics to consider:

Consume with awareness: Ask yourself, what problem am I trying to solve? Is this product really addressing that problem, or am I being drawn in by the buzz? Can I feasibly fit this tool into my current process?

Emphasize depth over breadth: Instead of trying to master many tools, focus on a handful that meet your needs and practice with them. You will gain far more from this than from surface-level tinkering.

Learn to say “no”: It is acceptable to decline a new technology, however intriguing. Time and attention are valuable. Avoid FOMO-driven decisions.

Human-AI cooperation: Remember that AI is a tool, not a substitute for human acumen. The ideal approach is to use AI alongside your own intelligence.

The AI revolution is altering the world, and it’s natural to get caught up in the excitement. At Greenlight, we help you harness AI’s promise without being overwhelmed by its rapid progress, by being careful, strategic, and discerning.

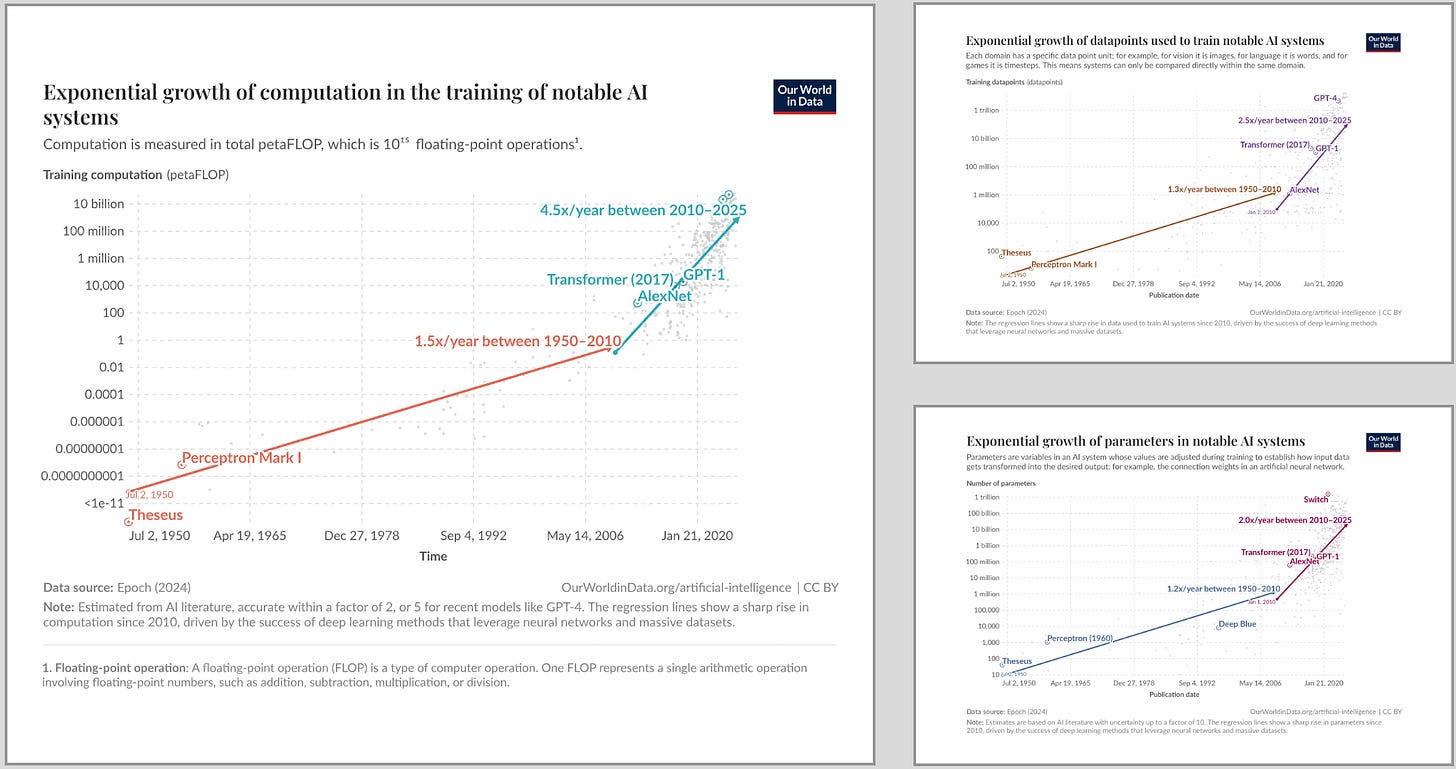

Chart of the Week:

AI’s scaling has depended on how much data, how many parameters, and how much computational power can be fed to the models. The charts below from “Our World in Data” show how stupendous the rise has been.

Since 2010, the training data has roughly doubled every nine to ten months. Datasets for training large language models have grown faster, tripling in size annually since 2010. GPT-2 was released in 2019 after training on 4 billion tokens (or 3 billion words). GPT-4 was trained using approximately 13 trillion tokens, or 9.75 trillion words.

Since 2010, the number of AI model parameters has roughly doubled every year. Large models like GPT-3 have around 175 billion parameters. In Epoch's data, the QMoE model has the highest estimated parameter count at 1.6 trillion.

Since 2010, compute growth has accelerated, with training compute doubling roughly every six months. From 1950 to 2010, it doubled every two years on average. The most compute-intensive model reaches 50 billion petaFLOP.

GPUs, crucial for training, are becoming increasingly powerful, with computing speed per dollar doubling every 2.5 years.
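The growth rates above are quoted in mixed units (doubling times in months or years, tripling per year), which makes them hard to compare. The short sketch below converts them to a common "times per year" factor. The helper names are ours, and the 0.75 words-per-token ratio is simply the one implied by the GPT-2 and GPT-4 figures quoted above.

```python
import math


def annual_growth_factor(doubling_months: float) -> float:
    """Yearly multiplier for a quantity that doubles every `doubling_months`."""
    return 2 ** (12 / doubling_months)


def doubling_time_months(annual_factor: float) -> float:
    """Doubling time in months for a quantity that grows `annual_factor`x per year."""
    return 12 * math.log(2) / math.log(annual_factor)


# Doubling times quoted in the article, in months.
quoted = {
    "training data (9 months)": 9,
    "training data (10 months)": 10,
    "parameters (1 year)": 12,
    "compute since 2010 (6 months)": 6,
    "compute 1950-2010 (2 years)": 24,
    "GPU speed per dollar (2.5 years)": 30,
}
for label, months in quoted.items():
    print(f"{label}: {annual_growth_factor(months):.2f}x per year")

# LLM datasets tripling annually, expressed as a doubling time.
print(f"LLM datasets (3x per year): doubling every {doubling_time_months(3):.1f} months")

# Words-per-token ratio implied by the quoted token and word counts.
WORDS_PER_TOKEN = 0.75
print(f"13 trillion tokens is about {13e12 * WORDS_PER_TOKEN / 1e12:.2f} trillion words")
```

On a common scale, compute doubling every six months is 4x per year, against about 1.4x per year before 2010 and about 1.3x per year for GPU speed per dollar. That difference is why training budgets, not just better chips, account for most of the growth in compute.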

Sound bites you should know:

BCI becomes two-way. Chinese researchers created the world’s first two-way brain-computer interface (BCI), which is 100x more efficient than existing systems. This breakthrough allows the brain and computer to learn from each other, improving control accuracy and enabling medical and consumer applications.

AI learns like humans. Researchers have developed a new AI algorithm called Torque Clustering that can learn without human supervision. The algorithm has shown 97.7% accuracy across 1,000 datasets.

Coding for everyone. Replit, Anthropic, and Google Cloud are using AI-powered coding tools to let non-technical individuals develop software. The system has already built over 100,000 applications.

Quantum computing is in the spotlight. Microsoft unveiled Majorana 1, the world’s first quantum chip built on a “topological core” architecture. Powered by topoconductors, this chip lays a foundation for scaling to one million qubits.

AI buzzwords. What do AI jargon terms like reasoning, evals, and synthetic data mean, and why do they matter? Read more here.

Thank you for reading! Let us know if you have any feedback. We would really appreciate it if you could share Binary Circuit and help us grow.

Cheers,

Team, Binary Circuit/Greenlight